Teradata

https://drive.google.com/file/d/1vevk2HJLngFs0PFqvefq4h_pbt1dB73t/view

Features of Teradata

📘 What is a Data Mart?

A Data Mart is a subject-specific subset of a data warehouse that focuses on a specific department, function, or business area (e.g., marketing, sales, HR).

==============================================================

Components of Teradata

Teradata is a very efficient, less expensive, and high-quality Relational Database management System.

Teradata is based on Massively Parallel Processing (MPP) architecture. It is made of Parsing Engine (PE), BYNET, Access Module Processors (AMPs), and other components such as nodes.

Below are some significant components of the Teradata, such as:

1. Parsing Engine: The Parsing Engine is a virtual processor (vproc) that interprets SQL requests, receives input records, and passes data. To do that, it sends the messages over the BYNET to the AMPs (Access Module Processor).

2. BYNET: This is the message-passing layer or simply the networking layer in Teradata. It receives the execution plan from the parsing engine and passes it to AMPs and the nodes. After that, it gets the processed output from the AMPs and sends it back to the parsing engine.

To maintain adequate availability, the BYNET 0 and BYNET 1 two types of BYNETs are available. This ensures that a secondary BYNET is available in case of the failure of the primary BYNET.

3. Access Module Processors (AMPs): These are the virtual processors of Teradata. They receive the execution plan and the data from the parsing engine. The data will undergo any required conversion, filtering, aggregation, sorting, etc., and will be further sent to the corresponding disks for storage.

The AMP is a virtual processor (vproc) designed for and dedicated to managing a portion of the entire database. It performs all database management functions such as sorting, aggregating, and formatting data. The AMP receives data from the PE, formats rows, and distributes them to the disk storage units it controls. The AMP also retrieves the rows requested by the Parsing Engine.

Table records will be distributed to each AMP for data storage. Only that AMP can read or write data into the disks that have access permission.

4. Nodes: The basic unit of a Teradata system is called a Node. Each node has its operating system, CPU memory, a copy of RDBMS software, and some disk space. A single cabinet can have one or more nodes in it.

The Parallel Database Extensions is a software interface layer that lies between the operating system and database. It controls the virtual processors (vprocs).

6.Disks

Disks are disk drives associated with an AMP that store the data rows. On current systems, they are implemented using a disk array.🔄 Query Execution Flow in Teradata

-

Query Submission

A user or application submits an SQL query. -

Parsing Engine (PE)

-

Parser: Checks SQL syntax and semantics.

-

Security: Validates user access.

-

Optimizer: Generates the most cost-effective execution plan.

-

Dispatcher: Sends the execution steps to BYNET.

-

-

BYNET

Routes the steps from the PE to the appropriate AMPs. -

AMPs (Access Module Processors)

-

Execute the steps (e.g., read/write data).

-

Retrieve or update data from their own disks.

-

Send results back to the PE.

-

-

PE (again)

-

Assembles the rows.

-

Sends the final result back to the client.

🔧 Parsing Engine (PE) – Teradata

A Parsing Engine (PE) is a virtual processor (vproc) that manages communication between a client application and the Teradata RDBMS. Each PE can handle up to 120 sessions, with each session capable of handling multiple SQL requests.

---------------------------------------------------------

🧠 What is BYNET in Teradata?

🧠 What is an AMP in Teradata?

The Access Module Processor (AMP) is a virtual processor (vproc) responsible for managing a portion of the database in Teradata. Each AMP owns and operates on a subset of the data stored on its own disk (vdisk), working independently in a shared-nothing architecture.

-----------------------------------------------------------------------------🖥️ What is a Node in Teradata?

A Node is a physical server in the Teradata system. It includes both hardware and software components that support the execution of Teradata’s parallel database processing.

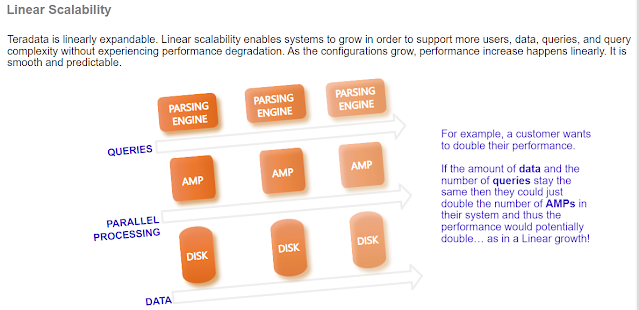

⚙️ Parallelism in Teradata: At the Core of Performance

Parallelism is a fundamental design principle in Teradata, enabling it to efficiently process massive volumes of data. Almost every component of Teradata is designed to operate in parallel — from query parsing to data retrieval and response.

Multi-value Compression

Multi-value compression has three variations:- COMPRESS – ONLY Nulls are compressed.

- COMPRESS NULL – Nulls are compressed. (COMPRESS & COMPRESS NULL mean the same thing)

- COMPRESS <constant> – Nulls and the specified <constant> value are compressed.

Algorithmic Compression

When column values are mostly unique, Algorithmic Compression can provide better compression results than Multi-value Compression. You can define your own compression and decompression algorithms and apply them to data at the column level.A user can create their own compression algorithms as external C/C++ scalar UDFs, and then specify them in the column definition of a CREATE TABLE/ALTER TABLE statement. Teradata invokes these algorithms to compress and decompress the data when the data is inserted into or retrieved from the table.

ALC allows you to implement the compression scheme that is most suitable for data in a particular column. The cost of compression and decompression depends on the algorithm chosen.

Block Level Compression

Performs compression on whole data blocks at the file system level before they are written to storage.

🔷 1. Tables

-

Core structure for storing data.

-

Consist of rows (records) and columns (fields).

-

Supports indexing, primary keys, foreign keys, etc.

🔷 2. Views

-

Virtual tables based on one or more tables or views.

-

Do not store data physically—just SQL definitions.

-

Use cases:

-

Restrict access to certain columns/rows.

-

Simplify complex joins/queries.

-

Standardize reporting logic.

-

-

X-Views: Special views that only show data specific to the accessing user.

🔷 3. Macros

-

Store predefined SQL commands under a name.

-

Can accept parameters.

-

Useful for repeating business logic or securing access.

-

Example:

🔷 4. Triggers

-

A set of SQL statements that automatically executes in response to data changes (INSERT, UPDATE, DELETE).

-

Attached to a table.

-

Used for enforcing rules or auditing changes.

🔷 5. Stored Procedures

-

A program written in SQL and stored on the database.

-

Can contain control-of-flow logic (IF, LOOP, etc.).

-

Can be scheduled, parameterized, or reused.

-

Example use case: ETL workflows, validations, auditing.

-

Supports error handling and transaction control.

🔷 6. User-Defined Functions (UDFs)

-

Custom functions written in C/C++ or SQL.

-

Types:

-

Scalar UDFs – return a single value.

-

Aggregate UDFs – return a summarized value.

-

Table UDFs – return a table.

-

-

Used for:

-

Complex calculations.

-

Reusability.

-

Protecting PII (Personally Identifiable Information).

-

🔷 7. Indexes

-

Used to optimize data access.

-

Types:

-

Primary Index (UPI/NUPI) – determines data distribution.

-

Secondary Index – improves access for non-primary keys.

-

Join Index – speeds up joins.

-

Hash Index – supports fast lookups (less common now).

SPACE

🔍 1. Perm Space

-

Definition: Maximum allowed space a user/database can occupy permanently.

-

Not pre-allocated, only consumed as needed.

-

Used for:

-

Table data (rows)

-

Stored procedures

-

User-Defined Functions (UDFs)

-

-

Required for creating tables.

🔍 2. Spool Space

-

Definition: Temporary space used during SQL execution.

-

Used for:

-

Sorting

-

Joining

-

Aggregation

-

Storing query results

-

-

Reclaimed automatically when the query ends.

-

Inherits limits from parent user/database unless explicitly set.

📌 Example:

You have 100 GB total disk space.

10 GB used for user data

10 GB for overhead

❓ Available spool space?

✅ Answer: 80 GB

🔍 3. Temp Space

-

Used for:

-

Global Temporary Tables (GTTs)

-

-

Persistence: Lasts until session ends.

-

Tables created in Temp Space survive restarts (not the data).

-

Treated like Perm Space, but temporarily allocated for session data.

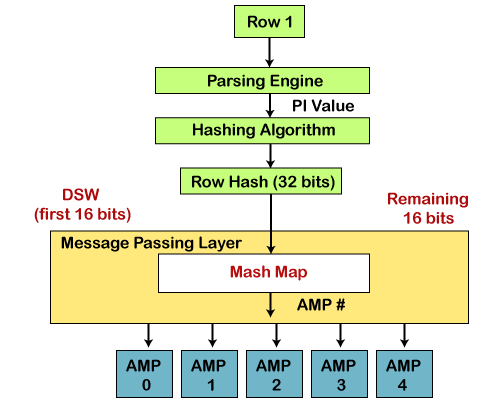

🚀 How Teradata Distributes Rows Across AMPs

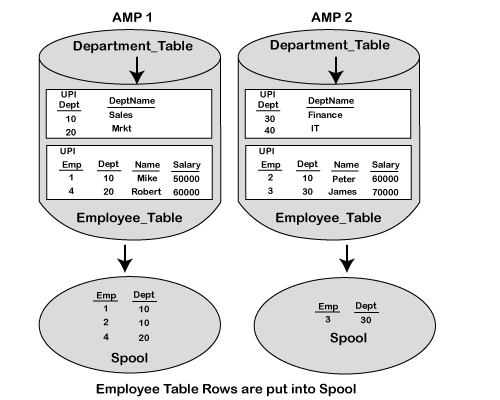

In Teradata, row distribution is fully automatic, even, and hash-based. The core idea is that each AMP (Access Module Processor) is responsible for a portion of each table’s data, and Teradata ensures the rows are distributed evenly using a hashing algorithm.

🔁 Hash-Based Distribution Mechanism

When a row is inserted into a table:

-

Teradata hashes the Primary Index column(s) value.

-

The resulting hash value is mapped to an AMP.

-

That AMP stores the row on its associated disk.

📌 Hashing is done on Primary Index (PI) — it’s how Teradata determines where to store a row.

🎯 Why Even Distribution Matters

-

Ensures parallelism is fully utilized.

-

Avoids skew (when some AMPs hold significantly more rows than others).

-

Prevents slowest AMP bottleneck in full table operations.

✅ Ideally:

-

Each table's rows are evenly distributed across all AMPs.

-

All AMPs perform roughly equal work on large queries.

✅ Best Practices

-

Choose PKs based on business rules and uniqueness.

-

Choose PIs based on query patterns, join usage, and data distribution.

-

Don't always assume PK = PI. Analyze based on performance needs.

📌 Primary Index (PI) in Teradata

The Primary Index (PI) is the core mechanism for distributing rows across AMPs and retrieving data efficiently.

✅ Key Characteristics

| Attribute | Description |

|---|---|

| Purpose | Determines how rows are distributed and accessed |

| When defined? | At CREATE TABLE time only – cannot be altered later |

| Max Columns | Can consist of 1 to 64 columns |

| Stored in | Data Dictionary (DD) |

🔄 Row Distribution Mechanism

-

Teradata uses a hashing algorithm on the PI column(s).

-

Hash result determines the AMP where the row will be stored.

-

Leads to parallelism across AMPs.

🧩 Types of Primary Index

| Type | Description | Impact |

|---|---|---|

| UPI (Unique Primary Index) | No duplicate values allowed | Ensures even row distribution, no duplicate check during loads, 1-AMP access |

| NUPI (Non-Unique Primary Index) | Duplicate values allowed | May cause data skew, slower joins/queries if not carefully chosen |

🧠 Best Practices for Choosing a PI

-

Choose a highly unique column to avoid skew (UPI preferred).

-

Consider query access patterns (frequent filters, joins).

-

Try to align PI with foreign keys in child tables for AMP-local joins.

-

Avoid columns with few distinct values (e.g., Gender, Status) as PI → causes skew.

📈 Why PI Matters for Performance

-

Every row access by PI is a 1-AMP operation → fastest.

-

Good PI choice = even AMP workload → maximum parallelism.

-

Bad PI choice = skew → one AMP overworked, others underutilized.

🚫 Common Mistakes

-

Using low cardinality columns as PI (e.g., "India", "Yes/No").

-

Forgetting that PI ≠ PK in Teradata.

-

Choosing PI without analyzing access patterns.

🔄 Row Distribution via Hashing in Teradata

📥 How Data Gets Distributed Across AMPs

When a row is inserted into a Teradata table:

-

The Primary Index (PI) value of the row is input into a hashing algorithm.

-

The algorithm outputs a 32-bit Row Hash.

-

The high-order 16 bits are used to find a Hash Bucket Number.

-

The Hash Map uses that bucket number to determine which AMP will store the row.

-

The AMP stores the row in its associated vDisk.

🔄 UPI Row Distribution

🔹 What is a NUPI?

-

A Non-Unique Primary Index allows duplicate values in the index column(s).

-

The hashing algorithm still determines the target AMP, but:

-

All rows with the same NUPI value go to the same AMP.

-

This can lead to uneven (skewed) distribution.

📊 Example: Skew from Poor NUPI Choice

If you choose

Order_Status(values = "C", "O") as a NUPI:-

All rows get hashed to just 2 AMPs.

-

Remaining AMPs stay idle → severe performance degradation.

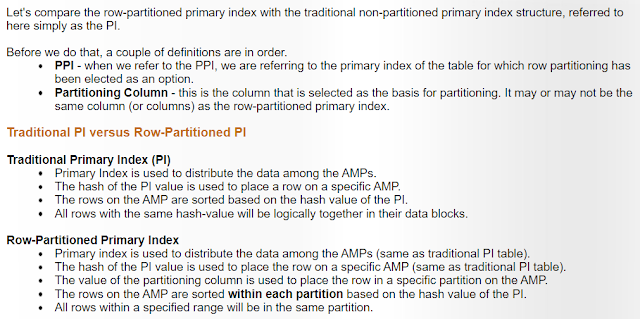

🔀 Partitioned Primary Index (PPI)

🔹 What is PPI?

PPI is a Teradata feature that divides rows into partitions based on a defined column or expression, while still distributing rows across AMPs based on the Primary Index (PI).

Key Benefits:

-

Improves query performance for range-based queries.

-

Allows partition elimination, where irrelevant partitions are excluded at query time.

-

Ideal for incremental loads, archiving, and deletions.

⚙️ How PPI Works:

-

Hashing for Distribution:

-

PI → Hash Value → Determines which AMP stores the row.

-

-

Partitioning for Storage Order:

-

Once inside an AMP, rows are grouped into partitions.

-

Within each partition, data is sorted by Row Hash (row ID).

-

-

Partition Elimination:

-

The optimizer analyzes query filters (e.g.,

WHERE order_date BETWEEN …) and skips partitions that won’t qualify. -

This reduces I/O and scan time dramatically.

-

🌲 Multilevel Partitioned Primary Index (MLPPI)

🔹 What is MLPPI?

MLPPI allows sub-partitioning — i.e., a partition within a partition — for greater granularity and performance.

Example Use Case:

-

Partition 1: by

Claim_Date -

Sub-partition: by

State

This improves query efficiency when filters match both levels, enabling multi-level partition elimination.

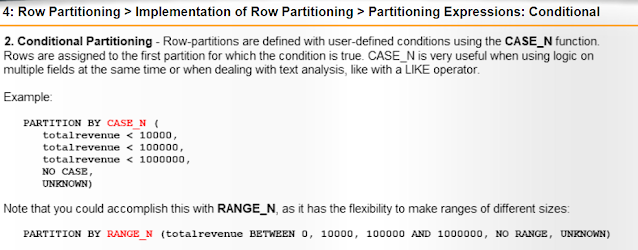

In Teradata, when using Multi-Level Partitioned Primary Index (MLPPI), you can define the partitions using either:

-

RANGE_N— for range-based partitioning (like dates, numbers) -

CASE_N— for custom logic or discrete values

🧩 Use RANGE_N When:

-

You are partitioning by a range of values (e.g., dates or numeric ranges).

-

Best for time-series data, like logs or transaction tables.

🧩 Use CASE_N When:

-

You want to partition using categorical values, custom conditions, or non-uniform ranges.

-

Good when data doesn't fit into neat, equal ranges.

✅ What is a NoPI Table?

A NoPI (No Primary Index) Table is a table that does not have a defined Primary Index. This was introduced in Teradata 13.0 to improve bulk load performance and simplify certain workloads.

CREATE TABLE table_x (col_x INTEGER ,col_y CHAR(10) ,col_z DATE) NO PRIMARY INDEX;

📦 Why Use NoPI Tables?

-

Ideal for staging or landing tables in ETL pipelines.

-

Great for bulk loading large volumes of data quickly.

-

Often used temporarily before redistributing data into final structured tables.

🧠 Best Practices:

-

Use NoPI tables for staging or intermediate ETL steps.

-

Avoid long-term use unless you really do not need joins or indexed access.

-

After loading, redistribute data into base tables with a PI for performance.

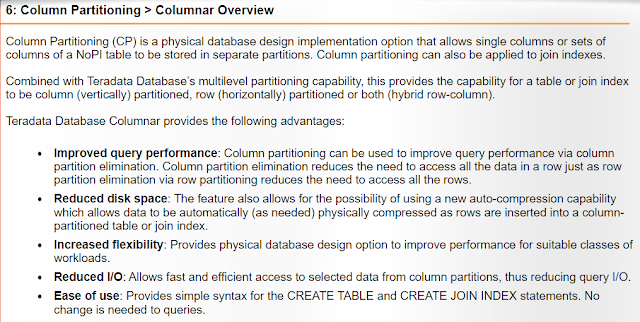

Introduced in Teradata 14.0, Columnar is a new way of physically organizing data by partitioning a table’s columns into separate storage units, rather than rows.

It can be applied to:

-

Tables (especially NoPI tables)

-

Join Indexes

You can also combine Column Partitioning with Row Partitioning (MLPPI) for a multi-dimensional partitioning strategy.

📘 Columnar Join Indexes:

A Column-Partitioned Join Index helps queries without changing the base table.

🔹 Must follow these rules:

-

Single-table only

-

No aggregate or compression

-

No PI or value ordering

-

Must include RowID of base table

-

Can be sparse or row-partitioned

Example use: Supporting BI reports where only a few columns are accessed repeatedly.

🎯 When to Use Columnar:

-

When most queries touch few columns.

-

When needing high compression and fast scans.

-

In CPU-rich environments.

-

As staging for analytics or data marts.

Teradata: Secondary Index (SI) Explained

A Secondary Index (SI) in Teradata is a physical access path to the data that is separate from the Primary Index (PI). It is used to improve query performance when the query's search condition does not use the PI columns.

🔍 Why Use a Secondary Index?

-

When queries frequently use non-PI columns in WHERE conditions.

-

To avoid full table scans.

-

To reduce query response time.

-

To optimize joins or aggregations on non-PI columns.

🛠️ How It Works

-

When you define a SI, Teradata creates a sub-table that holds:

-

The index value.

-

The row ID (ROWID) of the corresponding data row in the base table.

-

-

For USI, the sub-table is hash-distributed.

-

For NUSI, the sub-table is locally stored on the same AMP as the base table row (no redistribution).

🚦 Performance Tips

-

USI: Great for point queries (e.g.,

WHERE emp_name = 'Alice') -

NUSI: Better for range queries (e.g.,

WHERE salary BETWEEN 50000 AND 70000) -

Too many SIs can increase storage and slow down DML operations like INSERT/UPDATE.

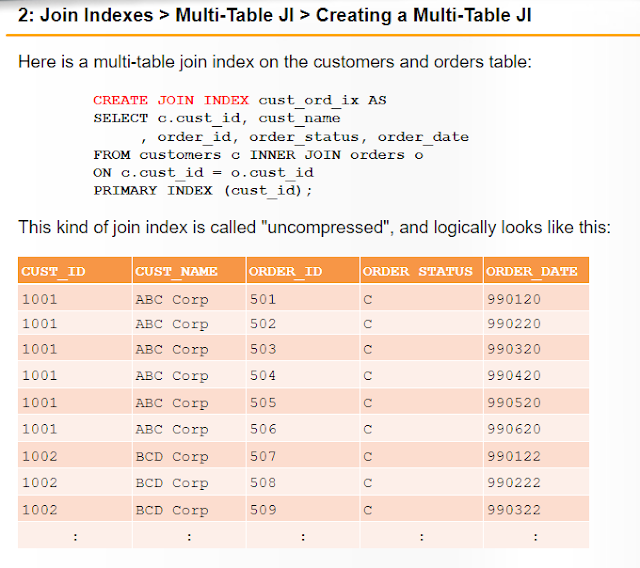

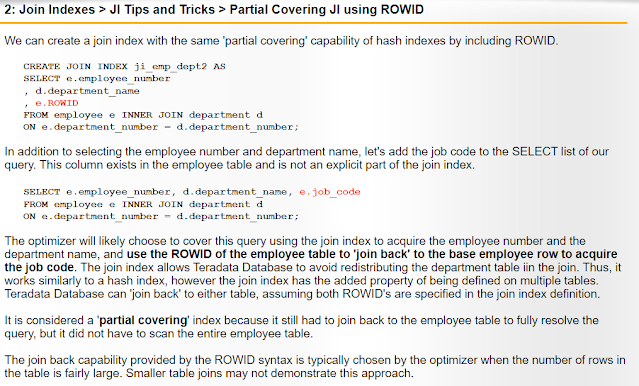

🔷 What is a Join Index (JI)?

A Join Index is a system-maintained physical structure that stores pre-joined or pre-aggregated data to:

-

Eliminate base table joins

-

Reduce data redistribution

-

Avoid aggregate processing

-

Improve query response time

🔷 What is a Single-Table Join Index (STJI)?

A Single-Table Join Index is a special type of join index that:

-

Is defined on only one table.

-

Uses a different column as its Primary Index (PI) than the base table’s PI.

-

Helps optimize joins between foreign keys and primary keys—especially in star schema or snowflake models.

-

Avoids row redistribution and base table access during queries.

🧩 Why Use STJI?

Teradata stores rows based on the PI. If you frequently join on a non-PI column (e.g., a foreign key), Teradata may redistribute data to perform the join.

✅ STJI prevents redistribution by pre-sorting and rehashing rows using the foreign key (or any chosen column).

What This Does:

-

Creates a copy of the

orderstable, hashed oncustomer_idinstead oforder_id. -

When a query joins

orders.customer_idtocustomers.customer_id, Teradata can use this index. -

No data redistribution needed because both tables are now hashed on the same column.

🔍 What is a Full Table Scan (FTS)?

A Full Table Scan happens when Teradata (or any database engine) reads every row in a table to find rows that match a query’s condition—because no index or partitioning strategy can narrow it down.

⚠️ This is often the least efficient way to access data, especially for large tables.

❌ What happens here?

-

The Primary Index (PI) is on

emp_id, but the query filters ondept_id, which is not indexed. -

Result: Teradata must read every row to find those where

dept_id = 10→ Full Table Scan.

📌 What is COLLECT STATISTICS in Teradata?

COLLECT STATISTICS (a.k.a. COLLECT STATS) gathers data demographics (like row count, value distribution, uniqueness) on columns, indexes, or partitions.

✅ These stats are used by the Teradata Optimizer to:

-

Choose optimal query plans

-

Decide whether to use an index or full table scan

-

Improve join strategies, aggregations, and access paths

💡 Why It Matters

Without stats, Teradata assumes uniform distribution and guesses row counts, which can result in:

-

Wrong join order

-

Full table scans

-

Skewed AMP workload

-

Longer response times

📊 What Does It Collect?

-

Distinct values count

-

Null count

-

Min/Max values

-

Frequency histogram

-

Row count

-

Skew factor

These help the optimizer estimate:

-

Selectivity (how many rows a filter returns)

-

Join cardinality

-

Join cost and order

-

Whether to use an index, join index, or full table scan

📦 What is COMPRESS in Teradata?

COMPRESS is a column-level compression feature in Teradata that reduces disk storage by storing repeated values only once and referencing them internally.

✅ It’s especially effective in large tables with:

-

Repeating values (like status = ‘Active’)

-

Nullable columns with many nulls

-

Low-cardinality columns (few distinct values)

🧱 How COMPRESS Works

Instead of storing the full value for every row, Teradata:

-

Stores common/repeating values only once in the table header.

-

Rows with those values point to the compressed value.

-

Values not listed in

COMPRESSare stored normally.

🔐 Teradata Data Protection Features

Teradata provides multi-layered protection, both at the system level (hardware & OS) and database level (AMP-level redundancy, journaling, and transaction integrity).

🔁 Real-world analogy

Imagine a shopping mall with multiple backup generators (nodes) and alternate power cables (buses). If one cable or generator fails, power (data processing) continues seamlessly.

🗃️ DATABASE-LEVEL PROTECTION

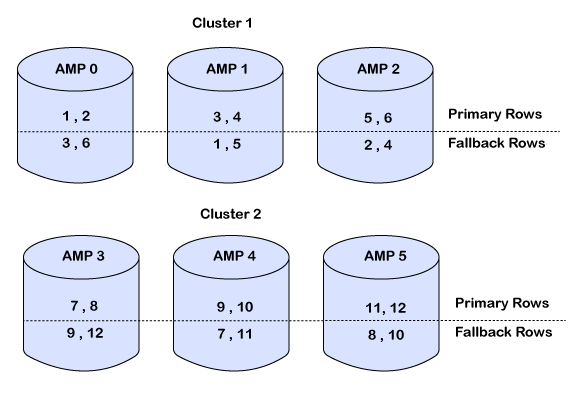

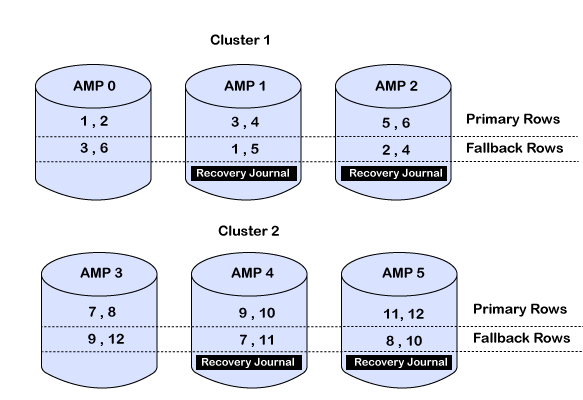

1. Fallback

-

Each row stored twice on different AMPs (primary + fallback copy).

Fallback is a data protection feature in Teradata that ensures AMP-level fault tolerance by keeping a duplicate copy of each row of a table on a different AMP in the same cluster.

-

If one AMP goes down, fallback AMP serves the data.

✅ Transparent to users, but requires:

-

2x disk space

-

Extra I/O for write operations

🔁 Real-world analogy:

Imagine every important document is kept in two different filing cabinets. If one cabinet is lost in a fire (AMP failure), the second one keeps business running.

📦 How It Works:

-

For every row inserted into a Fallback table, a second copy is created.

-

The primary row goes to one AMP (based on hashing).

-

The fallback row is stored on a different AMP in the same cluster.

-

If an AMP fails, queries will automatically read from the fallback AMP.

🧠 Example:

Suppose a table employees is defined with Fallback.

-

Row

emp_id = 101hashes to AMP 1 → primary row is stored here. -

Teradata automatically stores a fallback copy on AMP 2 (same cluster).

If AMP 1 fails, Teradata accesses the copy on AMP 2 seamlessly — no data loss and no user disruption.

2. Transient Journal (TJ)

The Transient Journal (TJ) is a temporary, automatic rollback mechanism used to protect data integrity during a transaction.

Keeps before images of rows during transactions.

To ensure atomicity in transactions — either all changes are committed, or none (rollback if failure occurs).

-

If transaction fails → rollback to original state.

🧩 How it works:

-

During a transaction, before any row is changed, the "before image" of the row is saved in the Transient Journal on the same AMP.

-

If the transaction succeeds (gets an

END TRANSACTION), the saved copies are discarded. -

If the transaction fails (e.g., AMP crash, session drop), the changes are rolled back using the saved before images.

✅ Fully automatic and transparent to the user.

3. Down AMP Recovery Journal

-

Activated when an AMP goes down.

-

Tracks changes that would have affected that AMP.

-

Ensures no data is lost during recovery.

4. Permanent Journal (Optional)

-

Logs before/after images permanently for auditing or full rollback.

-

Useful for regulatory or historical tracking.

5. Locks

-

Ensure data consistency.

-

Prevents multiple users from changing same data simultaneously.

🔐 Types: Read lock, Write lock, Exclusive lock, etc.

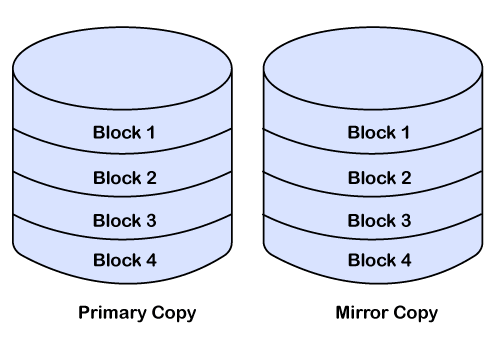

🛡️ What is RAID?

RAID (Redundant Array of Independent Disks) is a hardware-level protection mechanism that improves data availability and fault tolerance by distributing or duplicating data across multiple disk drives.

RAID is different from Fallback, which is a Teradata software-level protection strategy.

🔰 Types of RAID Supported in Teradata

🔁 RAID 1 – Mirroring

-

How it works: Every disk has an exact mirror copy on another disk.

-

Redundancy: 100%

-

If one disk fails: The mirrored disk is used — no performance loss.

-

Disk Space Cost: High (needs 2× storage).

-

Performance: Excellent, especially for read operations.

✅ Recommended for high-performance, critical systems.

🧮 RAID 5 – Parity-based

-

How it works: Data is striped across 3 disks, and a parity block is stored on a 4th disk. This parity helps reconstruct any one missing block.

-

Redundancy: Can tolerate one disk failure per rank.

-

If a disk fails: Data is recalculated using parity — performance decreases slightly.

-

Disk Space Cost: Low (more efficient than RAID 1).

-

Performance: Good under normal conditions, slower during recovery.

✅ Used where cost-efficiency is more important than top-tier speed.

🧮 RAID S – (RAID 5 variant used with EMC)

-

Similar to RAID 5

-

Found in EMC systems

-

Parity and striping logic is optimized for EMC array design

📘 What is BTEQ in Teradata?

🚀 When to Use BTEQ

-

Running SQL jobs in batch mode (cron jobs or scheduled tasks)

-

Exporting large volumes of data from Teradata

-

Loading data quickly into staging tables

-

Writing ETL-style scripts

-

Running SQL scripts with conditional or iterative logic

- Loads only empty tables: Target table must be empty before loading.

- Supports only INSERT operations: Cannot perform updates or deletes.

- Requires no secondary indexes or referential integrity on target table.

- Uses multiple sessions (parallelism) for speed.

- Supports error handling with error tables.

- Supports checkpoints for recovery.

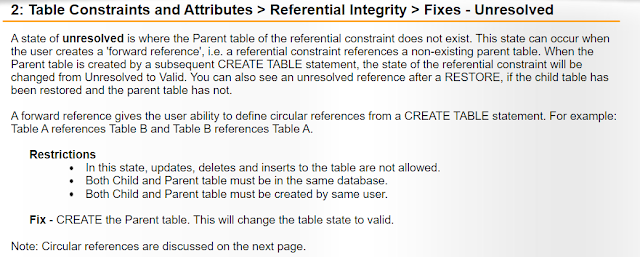

In Teradata, a table constraint is a rule applied at the table level (not just column level) to enforce data integrity. Table constraints are typically used for:

-

Composite primary keys

-

Composite unique constraints

-

Foreign key constraints referencing multiple columns

-

Check constraints on multiple columns

✅ Types of Table-Level Constraints in Teradata

| Constraint Type | Purpose | Declared At |

|---|---|---|

PRIMARY KEY | Enforces row uniqueness | Table-level / Column-level |

UNIQUE | Ensures column or column group is unique | Table-level / Column-level |

FOREIGN KEY | Enforces referential integrity | Table-level |

CHECK | Restricts values in rows | Table-level |

📌 Examples of Table Constraints in Teradata

1. Composite PRIMARY KEY (Table-level)

This ensures each

(order_id, product_id)pair is unique.

2. Composite UNIQUE Constraint

Ensures no two employees have the same email + phone combo.

3. Table-Level FOREIGN KEY Constraint

4. CHECK Constraint

Restricts values based on multiple column logic.

🧠 Notes for Teradata:

-

Foreign key and check constraints are declarative only in Teradata—they are not enforced by the database engine.

-

Use referential integrity manually via joins or scripts if enforcement is required.

Relational Database Terminology

| Set Theory Terms | Relational Database Term |

|---|---|

| Relation | Table |

| Tuple | Row |

| Attribute | Column |

Tables

Tables are the basic unit in RDBMS where the data is stored. Tables are two-dimensional objects consisting of rows and columns. Data is organized in tabular format and presented to the users of a relational database.

References between tables define the relationships and constraints of data inside the tables themselves.

Columns

A column always contains the same kind of information or contains similar data.

Row

A row is one instance of all the columns in the table.

DATabase

The database is a collection of logically related data. Many users access them for different purposes.

For example, a sales database contains complete information about sales, which is stored in many tables.

Primary Key

The primary key is used to identify a row in a table uniquely. No duplicate values are allowed in a primary key column, and they cannot accept NULL values. It is a mandatory field in a table.

Foreign Key

Foreign keys are used to build a relationship between the tables. A foreign key in a child table is defined as the primary key in the parent table.

A table can have more than one foreign key. It can accept duplicate values and also null values. Foreign keys are optional in a table.

Types of Table

🔹 1. Permanent Table

-

Default type of table.

-

Stores persistent data that is not deleted after a session ends.

-

Data is written to disk and protected using fallback or RAID.

🧾 Example:

🔹 2. Volatile Table

-

Temporary table only available during the session.

-

Data is stored in spool space and lost after logout.

-

Good for staging or session-specific processing.

🧾 Example:

🔹 3. Global Temporary Table (GTT)

-

Table definition persists, but data is session-specific.

-

Data is deleted at the end of each session.

-

Requires ON COMMIT clause.

🧾 Example:

🔹 4. Derived Table

-

Virtual table created in a subquery.

-

Exists only for the duration of the query.

-

Cannot be reused.

🧾 Example:

🔹 5. Queue Table

-

Used for asynchronous processing (message queuing).

-

Follows FIFO (First In First Out) principle.

-

Supports special operations like

READQandCONSUME.

🧾 Example:

🔹 6. Multiset Table

-

Allows duplicate rows.

-

Must be explicitly specified.

🧾 Example:

🔹 7. Set Table

-

Does not allow duplicate rows.

-

Teradata performs a duplicate row check on insert.

🧾 Example:

🔹 8. NoPI Table (No Primary Index)

-

Rows are not hashed by PI, but distributed round-robin.

-

Good for staging and bulk loading.

-

Can reduce skew in large loads.

🧾 Example:

🔹 9. Column-Partitioned Table (Columnar)

-

Stores data column-wise instead of row-wise.

-

Improves performance for analytic queries on few columns.

🧾 Example:

🔹 10. Fallback Table

-

Any table (except volatile and queue) can be fallback.

-

Stores duplicate copy of each row on a different AMP for data protection.

🧾 Example:

Teradata Data Manipulation

DROP TABLE <tablename>;

INSERT INTO <tablename> (column1, column2, column3)

VALUES (value1, value2, value3);

INSERT INTO target_table (column1, column2, column3)

SELECT column1, column2, column3

FROM source_table;

- We can update one or more values of the table.

- If the WHERE condition is not specified, then all rows of the table are impacted.

- We can update a table with the values from another table.

- DELETE FROM <tablename>

- [WHERE condition];

Rules

Here are some specific rules to delete records from the tables, such as:

- We can update one or more records of the table.

- If the WHERE condition is not specified, then all rows of the table are deleted.

- We can update a table with the values from another table.

Teradata Set Operator

SET operators combine results from multiple SELECT statements. This may look similar to Joins, but joins combines columns from various tables, whereas SET operators combine rows from multiple rows.

Rules for Set Operator

Here are the following rules to specify the Set operator, such as:

- The number of columns from each SELECT statement should be the same.

- The data types from each SELECT must be compatible.

- ORDER BY should be included only in the final SELECT statement.

Teradata SQL Set Operators

| Set Operator | Function |

|---|---|

| INTERSECT | It returns result in rows that appear in all answer sets generated by the individual SELECT statements. |

| MINUS / EXCEPT | The result is those rows returned by the first SELECT except for those also selected by the second SELECT. |

| UNION | It combines the results of two or more SELECT statements. |

1. UNION

The UNION statement is used to combine results from multiple SELECT statements. It ignores duplicates.

Example

Consider the following student table as T1 and attendance table as T2.

| RollNo | FirstName | LastName | BirthDate |

|---|---|---|---|

| 1001 | Mike | Richard | 1/2/1996 |

| 1002 | Robert | Williams | 3/5/1995 |

| 1003 | Peter | Collin | 4/1/1994 |

| 1004 | Alexa | Stuart | 11/6/1995 |

| 1005 | Robert | Peterson | 12/1/1997 |

| RollNo | present | Absent | % |

|---|---|---|---|

| 1001 | 200 | 20 | 90% |

| 1002 | 160 | 60 | 72% |

| 1003 | 150 | 70 | 68% |

| 1004 | 210 | 10 | 95% |

The following UNION query combines the RollNo value from both T1 and T2 tables.

When the query is executed, it gives the following output, such as:

RollNo 1001 1002 1003 1004 1005

2. UNION ALL

UNION ALL statement is similar to the UNION statement. It combines results from multiple tables, including duplicate rows.

Syntax

Following is the basic syntax of the UNION ALL statement.

Example

Following is an example for UNION ALL statement.

When the above query is executed, it produces the following output. And it returns the duplicates also.

RollNo 1001 1002 1003 1004 1005 1001 1002 1003 1004

3. INTERSECT

INTERSECT command is also used to combine results from multiple SELECT statements.

It returns the rows from the first SELECT statement that has a corresponding match in the second SELECT statement.

Syntax

Following is the basic syntax of the INTERSECT statement.

Example

Following is an example of the INTERSECT statement. It returns the RollNo values that exist in both tables.

When the above query is executed, it returns the following records. RollNo 1005 is excluded since it doesn't exist in the T2 table.

4. MINUS/EXCEPT

MINUS/EXCEPT commands combine rows from multiple tables and return the rows, which are in the first SELECT but not in the second SELECT. They both return the same results.

Syntax

Following is the basic syntax of the MINUS statement.

Example

Following is an example of a MINUS statement.

When this query is executed, it gives the following output.

RollNo 1005

Teradata String Manipulation

Teradata provides several functions to manipulate the strings. These functions are compatible with the ANSI standard.

Teradata String Functions are also supported most of the standard string functions along with the Teradata extension to those functions.

| String function | Explanation |

|---|---|

| Concat (string1, ..., stringN) | It returns the concatenation of two or more string values. This function provides the same functionality as the SQL-standard concatenation operator (||). |

| Length (string) | It returns the number of characters in the string. |

| Lower (string) | It converts a string to lower case. |

| Upper (string) | It converts a string to upper case. |

| Lpad (string, size, padstring) | Pads the left side of the string with characters to create a new string. |

| Rpad (string, size, padstring) | Pads the right side of the string with characters to create a new string. |

| Trim (string) | It removes leading and trailing whitespace from the given string. |

| Ltrim (string) | It removes leading whitespaces from the string. |

| Rtrim (string) | It removes trailing whitespaces from the string. |

| Replace (string, search) | It removes the search string from the given string. |

| Replace (string, search, replace) | It replaces all instances of search with replacing string. |

| Reverse (string) | It returns string characters in reverse order. |

| Split (string, delimiter) | Split given string on the delimiter. This function returns an array of string. |

| Strops (string, substring) | It returns the staring position first instance of a substring in a given string. |

| Position (substring IN string) | It returns the staring position first instance of a substring in a given string. |

| Substr (string, start, length) | It returns a substring of string that begins at the start position and is length characters long. |

| Chr (n) | It returns the character with the specified ASCII value. |

| to_utf8 (string) | It encodes a string into a UTF-8 varbinary representation. |

| from_utf8 (binary) | It decodes a UTF-8 encoded string from binary. |

| Select translate (string, from, to); | It can replace any character in the string that matches a character in the form set with the corresponding character in the set. |

| Index (string) | It locates the position of a character in a string (Teradata extension). |

UPPER & LOWER Function

The UPPER and LOWER functions convert the character column values all in uppercase and lowercase, respectively. UPPER and LOWER are ANSI compliant.

Syntax

Example

The following example will convert the "Robert" string in the upper case string.

After executing the above code, it will give the following output.

ROBERT

Now in the same example, we will convert the same "ROBERT" string in the lower case string.

Output

robert

CHARACTER_LENGTH Function

The CHARACTER_LENGTH function returns the numbers of characters of a character string expression.

- The result will be an integer number that represents the length.

- The result will be the same for the fixed-length character.

- The result will vary for the variable-length character.

- Spaces are valid characters so that the length will be counted for space.

Syntax

Example

The following example will return the number of characters of the "Robert" string.

Execute the above code, and it tells the length of the "Robert" string as the output shown below.

6

TRIM Function

The TRIM function is used to remove space a particular set of leading or trailing or both from an expression. TRIM is ANSI standards.

Syntax

Example

The following example removes the space from both the end of the "Robert" string.

When we execute the above code, it will trim the existing space from both ends of the string and gives the following output.

Robert

POSITION Function

The POSITION function is used to return the position of a substring inside the string. The position of the first occurrence of the string is returned only.

Syntax

Example

The following example will return the occurrence of "e" in the "Robert" string.

After executing the above code, it will find the position of "e" substring in the "Robert" string as output.

4

SUBSTRING Function

The SUBSTRING function is used to return a specified number of characters from a particular position of a given string. SUBSTRING function is ANSI standard.

Syntax

It returns a string (str), starting at the position (pos), and length (len) in characters.

Example

The following example returns the character from the 1st position for 3 characters.

The above code returns 3 characters from the 1st position of the string "Robert" as the output.

Rob

Teradata Date/Time Functions

Date/Time functions operate on either Date/Time or Interval values and provide a Date/Time value as a result.

The supported Date/Time functions are:

- CURRENT_DATE

- CURRENT_TIME

- CURRENT_TIMESTAMP

- EXTRACT

To avoid any synchronization problems, operations among these functions are guaranteed to use identical definitions for DATE, TIME, or TIMESTAMP, therefore following services are always valid:

- CURRENT_DATE = CURRENT_DATE

- CURRENT_TIME = CURRENT_TIME

- CURRENT_TIMESTAMP = CURRENT_TIMESTAMP

- CURRENT_DATE and CURRENT_TIMESTAMP always identify the same DATE

- CURRENT_TIME and CURRENT_TIMESTAMP always identify the same TIME

The values reflect the time when the request starts and does not change during the application's duration.

Date Storage

Dates are stored as integer internally using the following formula.

To check how the dates are stored using the following query.

Since the dates are stored as an integer, we can perform some arithmetic operations on them.

Teradata supports most of the standards date functions. Some of the commonly used date functions are listed below, such as:

| Date Function | Explanation |

|---|---|

| LAST_DAY | It returns the last day of the given month. It may contain the timestamp values as well. |

| NEXT_DAY | It returns the date of the weekday that follows a particular date. |

| MONTHS_BETWEEN | It returns the number of months between two date (timestamp) values. The result is always an integer value. |

| ADD_MONTHS | It adds a month to the given date (timestamp) value and return resulting date value. |

| OADD_MONTHS | It adds a month to the given date (timestamp) value and return resulting date value. |

| TO_DATE | It converts a string value to a DATE value and returns the resulting date value. |

| TO_TIMESTAMP | It converts a string value to a TIMESTAMP value and returns resulting timestamp value. |

| TRUNC | It returns a DATE value with the time portion truncated to the unit specified by a format string. |

| ROUND | It returns a DATE value with the time portion rounded to the unit specified by a format string. |

| NUMTODSINTERVAL | It converts a numeric value to interval days to seconds. |

| NUMTOYMINTERVAL | It converts a numeric value to interval years to the month. |

| TO_DSINTERVAL | It converts a string value to interval days to second. |

| TO_YMINTERVAL | It converts a string value to interval year to a month. |

| EXTRACT | It extracts portions of the day, month, and year from a given date value. |

| INTERVAL | INTERVAL function is used to perform arithmetic operations on DATE and TIME values. |

EXTRACT

EXTRACT function is used to extract portions of the day, month, and year from a DATE value. This function is also used to extract hour, minute, and second from TIME/TIMESTAMP value.

Examples

1. The following example shows how to extract Year value from Date and Timestamp values.

Output

2020

2. The following example shows how to extract Month values from Date and Timestamp values.

Output

3. The following example shows how to extract Day values from Date and Timestamp values.

Output

22

4. The following example shows how to extract Hour values from Date and Timestamp values.

Output

6

5. The following example shows how to extract Minute values from Date and Timestamp values.

Output

46

6. The following example shows how to extract the Second values from Date and Timestamp values.

Output

25.150000

INTERVAL

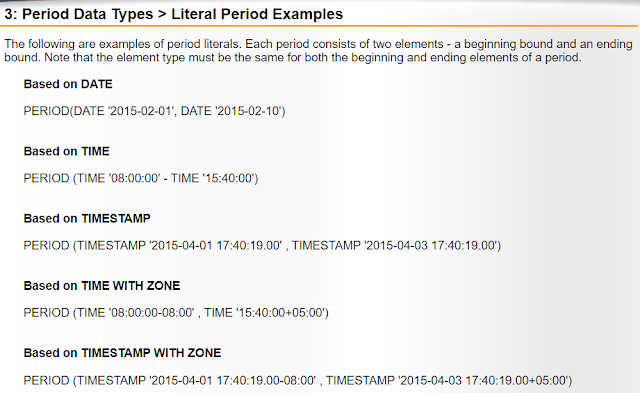

Teradata provides INTERVAL function to perform arithmetic operations on DATE and TIME values. There are two types of INTERVAL functions, such as:

1. Year-Month Interval

- YEAR

- YEAR TO MONTH

- MONTH

2. Day-Time Interval

- DAY

- DAY TO HOUR

- DAY TO MINUTE

- DAY TO SECOND

- HOUR

- HOUR TO MINUTE

- HOUR TO SECOND

- MINUTE

- MINUTE TO SECOND

- SECOND

Examples

1. The following example adds 4 years to the current date.

Output

05/22/2024

2. The following example adds 4 years and 03 months to the current date.

Output

08/22/2024

3. The following example adds 03 days, 05 hours, and 10 minutes to the current timestamp.

Output

05-25-2020 10:07:25.150000+00.00

Teradata Built-In Functions

Teradata provides built-in functions, which are extensions to SQL. The Built-in functions return information about the system.

Built-in functions are sometimes referred to as individual registers. It can be used anywhere that a literal can appear.

If a SELECT statement that contains a built-in function references a table name, then the result of the query contains one row for every row of the table that satisfies the search condition.

Some common built-in functions are listed below with examples, such as:

| S. No. | Function | Example |

|---|---|---|

| 1 | SELECT DATE; | Date- 2018/02/10 |

| 2 | SELECT CURRENT_DATE; | Date- 2020/05/23 |

| 3 | SELECT TIME; | Time- 09:02:00 |

| 4 | SELECT CURRENT_TIME; | Time- 10:01:13 |

| 5 | SELECT CURRENT_TIMESTAMP; | Current TimeStamp(6)- 2020-05-23 10:01:13.990000+00.00 |

| 6 | SELECT DATABASE; | Database- TDUSER |

DISTINCT Option

The DISTINCT option specifies that duplicate values which are not to be used when an expression is processed.

The following SELECT returns the number of unique job titles in a table.

Output

2000 1000 00 3000

A query can have multiple aggregate functions that use DISTINCT with the same expression, such as:

A query can also have multiple aggregate functions that use DISTINCT with different expressions, such as:

Teradata CASE & COALESCE

CASE and COALESCE both functions are used in Teradata for different purposes. Both functions have different functionalities.

CASE Expression

Teradata CASE statement provides the flexibility to fetch alternate values for a column base on the condition specified in the expression.

CASE expression evaluates each row against a condition or WHEN clause and returns the result of the first match if there are no matches, then the result from the ELSE part of the return.

Syntax

Following is the syntax of the CASE expression.

Example

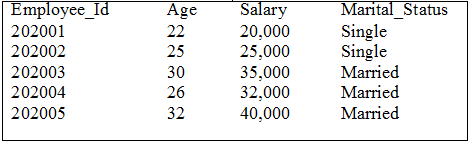

Consider the following Employee record in the below table.

| Employee_Id | Age | Salary | Marital_Status |

|---|---|---|---|

| 202001 | 22 | 20,000 | 1 |

| 202002 | 25 | 25,000 | 1 |

| 202003 | 30 | 35,000 | 2 |

| 202004 | 26 | 32,000 | 2 |

| 202005 | 32 | 40,000 | 2 |

In the above example, we evaluate the Marital_Status column. It returns 1 if the marital status is Single and returns 2 if the marital status is married. Otherwise, it returns the value as Not Sure.

Now, we will apply the CASE statement on Marital_Status column as follows:

After executing the above code, it produces the following output.

The above CASE expression can also be written in the following way, which will produce the same result as above.

COALESCE Expression

Teradata COALESCE is used for NULL handling. The COALESCE is a statement that returns the first non-null value of the expression. It returns NULL if all the arguments of the expression evaluate to NULL. Following is the syntax.

Syntax

Here is the basic syntax of the COALESCE function:

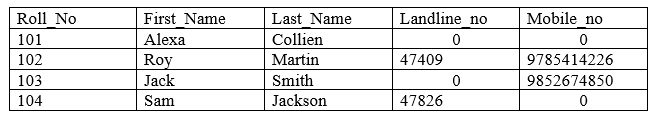

Example

Consider the following Student table.

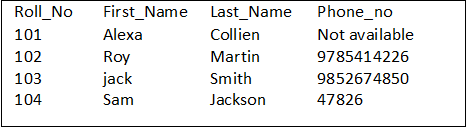

Now we can prioritize which phone number to select using COALESCE function as follows:

In the above example, we will search for Landline_no first. If that is NULL, it will search for Mobile_no, respectively. If both the numbers are NULL, it will return not available. And if none of the argument is returning, not NULL value, it will return the default value from those columns.

When we execute the above query, it generates the following output.

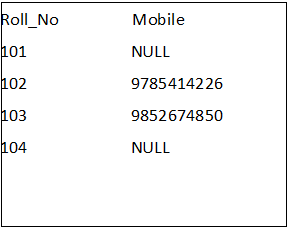

NULLIF

The NULLIF statement returns NULL if the arguments are equal.

Syntax

Following is the syntax of the NULLIF statement.

Example

The following example returns NULL if the Mobile_No is equal to 0. Otherwise, it returns the Mobile_No value.

The above query returns the following records. We can see that Roll_No 101 and 104 have Mobile as NULL.

Teradata Primary Index

The primary index is used to specify where the data resides in Teradata. It is used to determine which AMP gets the data row.

In Teradata, each table is required to have a primary index defined. If the primary index is not defined, Teradata automatically assigns the primary index.

The primary index provides the fastest way to access the data. A primary may have a maximum of 64 columns. The primary index is defined while creating a table, and it cannot be altered or modified.

The primary index is the most preferred and essential index for:

- Data distribution

- Known access path

- Improves join performance

Rules for Primary Index

Here are some specific rules for the Primary index, such as:

Rule 1: One Primary index per table.

Rule 2: A Primary index value can unique or non-unique.

Rule 3: The Primary index value can be NULL.

Rule 4: The Primary index of a populated table cannot be modified.

Rule 5: the Primary index value can be modified.

Rule 6: A Primary index has a limit of 64 columns.

Types of Primary Index

There are two types of Primary Indexes.

- Unique Primary Index (UPI)

- Non-Unique Primary Index (NUPI)

1. Unique Primary Index (UPI)

In the Unique Primary Index table, the column should not have any duplicate values. If any duplicate values are inserted, they will be rejected. The Unique Primary index enforces uniqueness for a column.

A Unique Primary Index (UPI) will always spread the rows of the table evenly amongst the AMPs. UPI access is always a one-AMP operation.

How to create a Unique Primary Index?

In the following example, we create the Student table with columns Roll_no, First_name, and Last_name.

| Roll_no | First_name | Last_name |

|---|---|---|

| 1001 | Mike | Richard |

| 1002 | Robert | Williams |

| 1003 | Peter | Collin |

| 1004 | Alexa | Stuart |

| 1005 | Robert | Peterson |

We have selected Roll_no to be our Primary Index. Because we have designated Roll_no as a Unique Primary Index, there can be no duplicates of student roll numbers in the table.

2. Non-Unique Primary Index (NUPI)

A Non-Unique Primary Index (NUPI) means that the values for the selected column can be non-unique.

A Non-Unique Primary Index will never spread the table rows evenly. An All-AMP operation will take longer if the data is unevenly distributed.

We might pick a NUPI over a UPI because the NUPI column may be more useful for query access and joins.

How to Create a Non-Unique Primary Index?

In the following example, we create the employee table with columns Employee_Id, Name, Department, and City.

| Employee_Id | Name | Department | City |

|---|---|---|---|

| 202001 | Max | Sales | London |

| 202002 | Erika | Finance | Washington |

| 202003 | Nancy | Management | Paris |

| 202004 | Bella | Human Recourse | London |

| 202005 | Sam | Marketing | London |

Every employee has a different employee Id, name, and department, but many employees belong to the same city in this table. Therefore we have selected City to be our Non-Unique Primary Index.

Multi-Column Primary Indexes

Teradata allows more than one column to be designated as the Primary Index. It is still only one Primary Index, but it is merely made up of combining multiple columns.

Teradata Multi-column Primary index allows up to 64 combined columns to make up the one Primary Index required for a table.

Example

In the following example, we have designated First_Name, and Last_Name combined to make up the Primary Index.

This is very useful and beneficial for two reasons:

- To get better data distribution among the AMPs.

- And for users who often use multiple keys consistently to query.

Data distribution using Primary Index

When a user submits an SQL request against a table using a Primary Index, the request becomes a one-AMP operation, which is the most direct and efficient way for the system to find a row. The process is explained below.

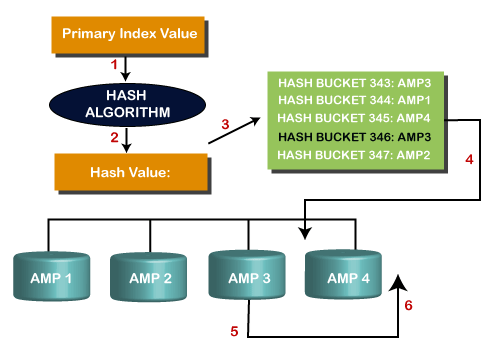

The complete process is explained in the below image:

Hashing Process

The process of Hashing is defined in the following steps, such as:

Step 1: In the first step, the Primary index value goes into the hashing algorithm.

Step 2: The output of the hashing algorithm is the row hash value.

Step 3: The hash map points to the specific AMP where the row resides.

Step 4: The PE sends the request directly to the identified AMP.

Step 5: The AMP locates the row(s) on its vdisk.

Step 6: The data is sent to PE through BYNET, and PE sends the answer set to the client application.

Duplicate Row Hash Values

The hashing algorithm can end up with the same row hash value for two different rows.

We can do this in two ways, such as:

- Duplicate NUPI values: If a Non-Unique Primary Index is used, duplicate NUPI values will produce the same row hash value.

- Hash synonym: It is also called a hash collision. It occurs when the hashing algorithm calculates an identical row hash value for two different Primary Index values.

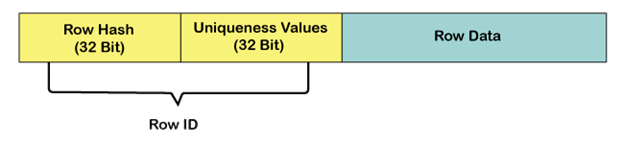

To differentiate each row in a table, every row is assigned a unique Row ID. The Row ID is the combination of the row hash value and unique value.

The uniqueness value is used to differentiate between rows whose Primary Index values generate identical row hash values. In most cases, only the row hash value portion of the Row ID is needed to locate the row.

When each row is inserted, the AMP adds the row ID, stored as a prefix of the row.

The first row inserted with a particular row hash value is assigned a unique value of the unique value is incremented by 1 for any additional rows inserted with the same row hash value.

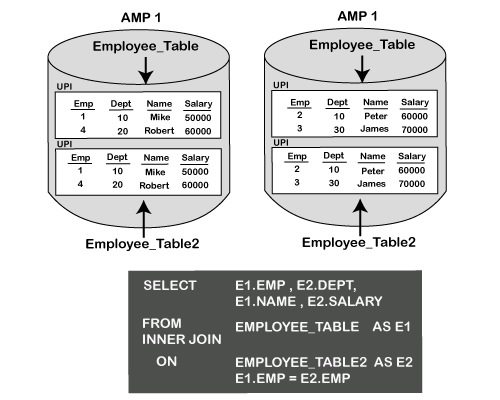

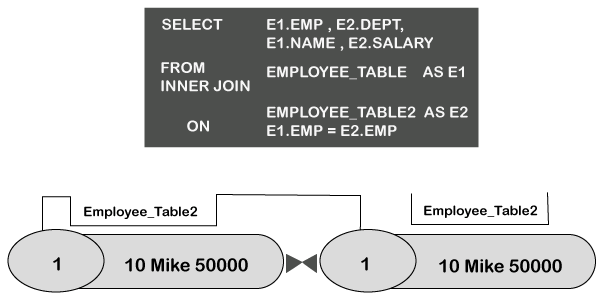

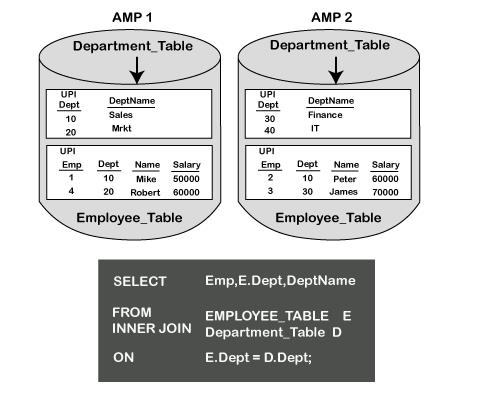

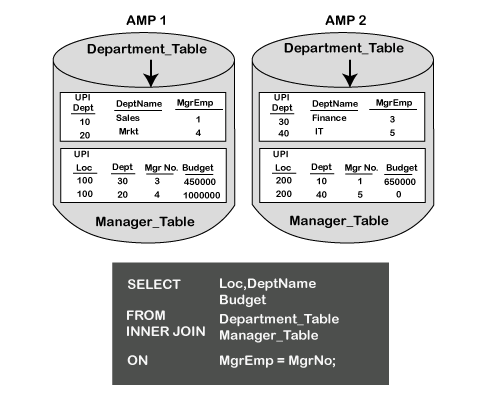

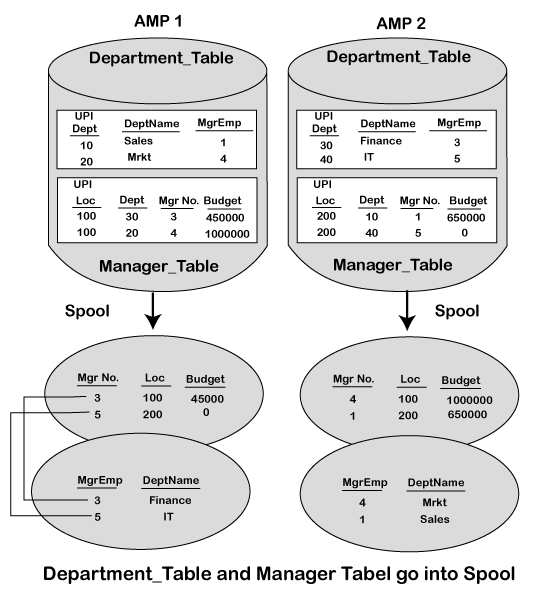

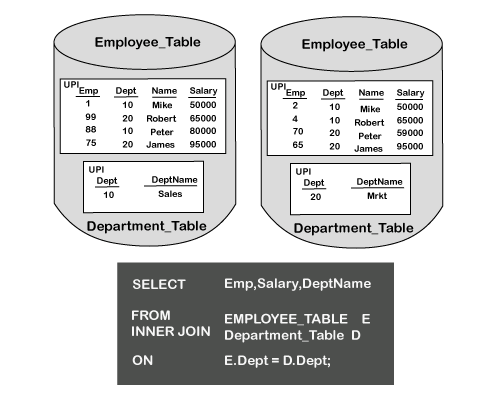

Teradata Joins

Join is used to combine records from more than one table. Tables are joined based on the common columns and values from these tables.

There are different types of Joins available in Teradata.

- Inner Join

- Left Outer Join

- Right Outer Join

- Full Outer Join

- Self Join

- Cross Join

- Cartesian Production Join

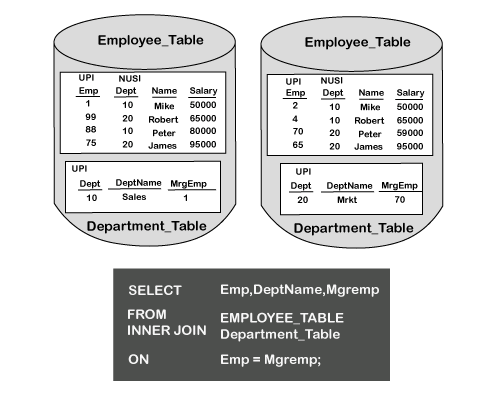

INNER JOIN

Inner Join combines records from multiple tables and returns the values that exist in both the tables.

Syntax

Following is the syntax of the INNER JOIN statement.

Example

Consider the following two tables, such as the student table and attendance table.

Student Table:

| RollNo | FirstName | LastName | BirthDate |

|---|---|---|---|

| 1001 | Mike | Richard | 1/2/1996 |

| 1002 | Robert | Williams | 3/5/1995 |

| 1003 | Peter | Collin | 4/1/1994 |

| 1004 | Alexa | Stuart | 11/6/1995 |

| 1005 | Robert | Peterson | 12/1/1997 |

Attendance Table:

| RollNo | present | Absent | % |

|---|---|---|---|

| 1001 | 200 | 20 | 90% |

| 1002 | 160 | 60 | 72% |

| 1003 | 150 | 70 | 68% |

| 1004 | 210 | 10 | 95% |

The following query joins the student table and attendance table on the common column Rollno. Each table is assigned an alias A & B, and the columns are referenced with the correct alias.

When the above query is executed, it returns the following records. Rollno 1005 is not included in the result since it doesn't have matching records in the attendance table.

OUTER JOIN

LEFT OUTER JOIN and RIGHT OUTER JOIN also combine the results from multiple tables.

- LEFT OUTER JOIN:It returns all the records from the left table and returns only the matching records from the right table.

- RIGHT OUTER JOIN:It returns all the records from the right table and returns only matching rows from the left table.

- FULL OUTER JOIN:It combines the results from both LEFT OUTER and RIGHT OUTER JOINS. It returns both matching and non-matching rows from the joined tables.

Syntax

Following is the syntax of the OUTER JOIN statement. We need to use one of the options from LEFT OUTER JOIN, RIGHT OUTER JOIN, or FULL OUTER JOIN.

Example

Consider the following example of the LEFT OUTER JOIN query. It returns all the records from the Student table and matching records from the attendance table.

When the above query is executed, it produces the following output. For student 1005, % value is NULL, since it doesn't have matching records in the attendance table.

CROSS JOIN

Cross Join joins every row from the left table to every row from the right table.

Cross Join is a Teradata specified join, which is equivalent to the product join. There will not be an "ON" keyword in the Cross Join.

Syntax

Following is the syntax of the CROSS JOIN statement.

Example

Consider the following example of the CROSS JOIN query.

When the above query is executed, it produces the following output. RollNo 1001 from the Student table is joined with each and every record from Attendance Table.

Rollno Rollno % 1001 1001 90% 1001 1002 72% 1001 1003 68% 1001 1004 95%

Teradata Substring

The Teradata SUBSTRING or SUBSTR function is one of the Teradata string functions, and it is used to cut a substring from a string based on its position.

SUBSTR or SUBSTRING will work the same in Teradata. But the syntax may be different.

We use ANSI syntax for Teradata SUBSTRING and Teradata syntax for Teradata SUBSTR. The ANSI syntax is designed to compatible with other database systems.

Syntax

Or

Argument Types and Rules

SUBSTRING and SUBSTR operate on the following types of arguments:

- Character

- Byte

- Numeric

If the string_expression argument is numeric, then User-defined type (UDT) are implicitly cast to any of the following predefined types:

- Character

- Numeric

- Byte

- DATE

To define an implicit cast for a UDT, we use the CREATE CAST statement and specify the AS ASSIGNMENT clause.

Implicit type conversion of UDTs for system operators and functions, including SUBSTRING and SUBSTR, is a Teradata extension to the ANSI SQL standard. To disable this extension, set the DisableUDTImplCastForSysFuncOp field of the DBS Control Record to TRUE.

Result Type and Attributes

Here are the default result type and attributes for SUBSTR and SUBSTRING, such as:

If the string argument is a:

- BLOB, then the result type is BLOB(n).

- Byte string other than BLOB, the result type is VARBYTE(n).

- CLOB, then the result type is CLOB(n).

- Numeric or character string other than CLOB, the result type is VARCHAR(n).

In ANSI mode, the value of n for the resulting BLOB(n), VARBYTE(n), CLOB(n), or VARCHAR(n) is the same as the original string.

In Teradata mode, the value of n for the result type depends on the number of characters or bytes in the resulting string. To get the data type of the resulting string, we can use the TYPE function.

Difference between SUBSTRING and SUBSTR

The SUBSTR function is the original Teradata substring operation. It is written to be compatible with DB/2.

- It can be used in the SELECT list to return any portion of the character that data stored in a column to a client or in the WHERE clause.

- When we use the SUBSTR function, such as SUBSTRING, the name of the column needs to be provided along with the starting character location and the length or number of characters to return.

- The main difference is that commas are used as delimiters between these three parameters instead of FROM and FOR.

The SUBSTRING function length is optional. When it is not included, all remaining characters to the end of the column are returned. In the earlier releases of Teradata, the SUBSTR was much more restrictive in the values allowed. This situation increased the chances of the SQL statement failing due to unexpected data or costs.

- Both SUBSTRING and SUBSTR allow for partial character data strings to be returned, even in ANSI mode. These functions only store the requested data in a spool, not the entire column. Therefore, the amount of spool space required can be reduced or tuned using the substring functions.

- The SUBSTR is more compatible and tolerant regarding the parameter values passed to them, like the newer SUBSTRING.

- The SUBSTRING is the ANSI standard, and therefore, it is the better choice between these two functions.

Teradata Table Types

Teradata supports the following types of tables to hold temporary data.

| S. No. | Table Types | Description |

|---|---|---|

| 1 | ANSI Temporal | ANSI-compliant support for temporal tables. Using temporal tables, Teradata Database can process statements and queries that include time-based reasoning. Temporal tables record both system time (the time when the information was recorded in the database) and valid time (when the information is in effect or correct in a real-world application). |

| 2 | Derived | A derived table is a type of temporary table obtained from one or more other tables as the result of a SubQuery. It is specified in an SQL SELECT statement. It avoids the need to use the CREATE and DROP TABLE statements for storing retrieved information. It is useful when we are more sophisticated and complex query codes. |

| 3 | Error Logging | Error logging tables store the information about errors on an associated permanent table. And it also stores Log information about insert and updates errors. |

| 4 | Global Temporary | Global temporary tables are private to the session. It is dropped automatically at the end of a session. It has a persistent table definition stored in the Data Dictionary. The saved description may be shared by multiple users and sessions, with each session getting its instance of the table. |

| 5 | Global Temporary Trace | Global temporary trace tables are store trace output for the length of the session. It has a persistent table definition stored in the Data Dictionary. It is useful for debugging SQL stored procedures (via a call to an external stored procedure written to the trace output) and external routines (UDFs, UDMs, and external stored procedures). |

| 6 | NoPI | NoPI tables are permanent tables that do not have primary indexes defined on them. They provide a performance advantage when used as staging tables to load data from FastLoad or TPump Array INSERT. They can have secondary indexes defined on them to avoid full-table scans during row access. |

| 7 | Permanent | Permanent tables allow different sessions and users to share table content. |

| 8 | Queue | Queue tables are permanent tables with a timestamp column. The timestamp indicates when each row was inserted into the table. It is established first-in-first-out (FIFO) ordering of table contents, which is needed for customer applications requiring event processing. |

| 9 | Volatile | Volatile tables are used only when

|

Derived Table

Derived tables are created, used, and dropped within a query. These are used to store intermediate results within a query.

Example

Consider the following employee record in the form of two tables.

Emp Table:

| Employee_Id | First_Name | Last_Name | Department_No |

|---|---|---|---|

| 202001 | Mike | Richard | 1 |

| 202002 | Robert | Williams | 2 |

| 202003 | Peter | Collin | 2 |

| 202004 | Alexa | Stuart | 1 |

| 202005 | Robert | Peterson | 1 |

Salary Table:

| Employee_Id | First_Name | Last_Name | Department_No |

|---|---|---|---|

| 202001 | 40,000 | 4,000 | 36,000 |

| 202002 | 80,000 | 6,000 | 74,000 |

| 202003 | 90,000 | 7,000 | 83,000 |

| 202004 | 75,000 | 5,000 | 70,000 |

| 202005 | 80,000 | 00 | 80,000 |

The following query creates a derived table EmpSal with records of employees with a salary higher than 80,000.

When the above query is executed, it returns the employee's records with a salary higher than or equal to 80,000.

Employee_Id First_Name NetPay 202003 Peter 83,000 202005 Robert 80,000

Volatile Table

Volatile tables are created and dropped within a user session. Their definition is not stored in the data dictionary. They hold intermediate data of the query, which is frequently used.

Syntax

Example

In the following example, we create a VOLATILE table that name is dept_stat.

After executing the above code, it returns the min, avg, and max salary according to the departments in the output.

Dept_no avg_salary max_salary min_salary 1 186,000 80,000 36,000 2 157,000 83,000 74,000

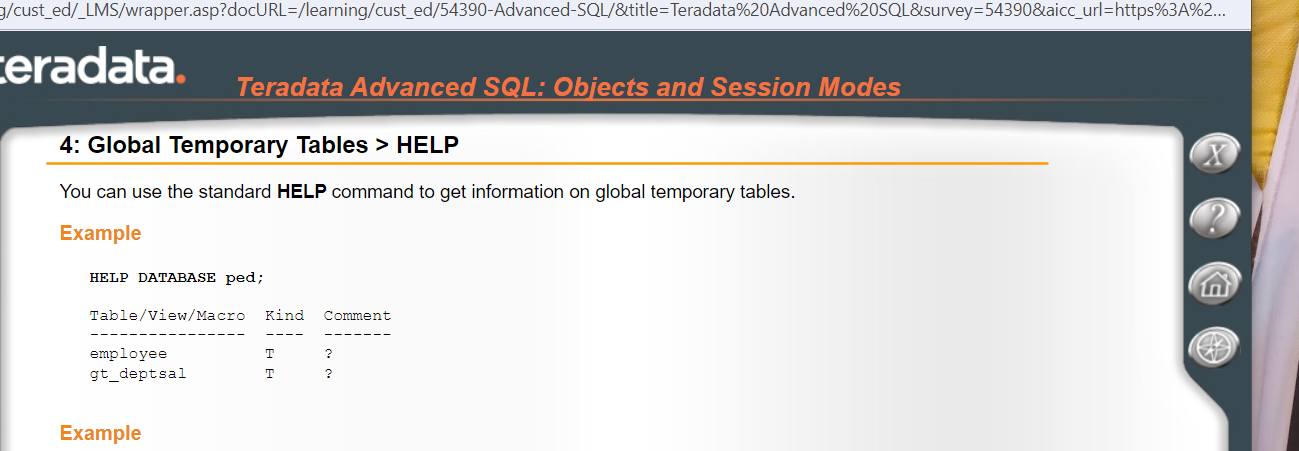

Global Temporary Table

The definition of the Global Temporary table is stored in the data dictionary, and they can be used by many users and sessions. But the data loaded into a global temporary table is retained only during the session.

We can materialize up to 2000 global temporary tables per session.

Syntax

Following is the syntax of the Global Temporary table.

Example

Below query creates the Global Temporary table, such as:

When the above query is executed, it returns the following output.

A table has been created.

Teradata Space Concepts

Teradata is designed to reduce the DBA's administrative functions when it comes to space management. Space concepts are configured in the following ways in the Teradata system.

- Permanent Space

- Spool Space

- Temporary Space

Permanent Space (Perm Space)

Permanent space is the maximum amount of space available for the user and database to hold data rows. Permanent tables, journals, fallback tables, and secondary index sub-tables use permanent space.

Permanent space is not pre-allocated for the database and user. The amount of permanent space is divided by the number of AMPs. Whenever per AMP limit exceeds, an error message is generated.

Equal distribution is necessary because there is a high percentage that the objects will be shared across all the AMPs, and at the time of data retrieval, all AMPs will work parallel to fetch the data.

Unlike other relational databases, the Teradata database does not physically define the Perm space at the time of object creation. Instead of that, it represents the upper limit for the Perm space, and then Perm space is used dynamically by the objects.

Example

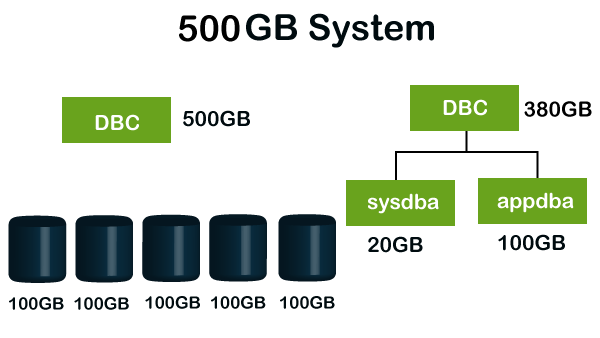

Suppose we have a Teradata system having 500 GB Perm space. Initially, DBC owns 100% of the perm space of a new system. As in Teradata, everything is parallel. Perm space will be distributed evenly across all AMPs.

Teradata system having 500GB perm space and 5 AMPs, each AMP will get 100GB of perm space to execute a query in parallel if DBC creates two users as sysdba and appdba with 20GB and 100GB perm space respectively.

Space will be taken from parent i.e., DBC. So now DBC has 280GB of perm space. This mechanism ensures enough memory to execute all processes in the Teradata system.

Spool Space

Spool space is the amount of space on the system that has not been allocated. It is used by the system to keep the intermediate results of the SQL query. Users without spool space cannot execute any query.

When executing a conditional query, all the qualifying rows which satisfy the given condition will be store in the Spool space for further processing by the query. Any Perm space currently unassigned is available as a Spool space.

Spool space defines the maximum amount of space the user can use. Establishing a Spool space limit is not required when Users and Databases are created. But it is highly recommended to define the upper limit of Spool space for any object.

Example

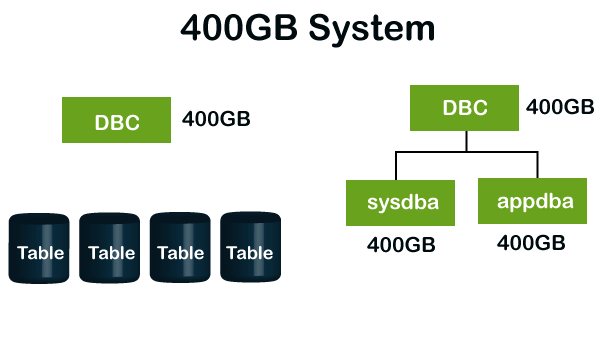

Suppose a 10GB permanent space has been allocated to a database. However, the actual perm space occupied by tables took up 50% of perm space i.e., 5GB.

The remaining 5GB space is available system-wide for a spool. Spool space for the child will not be subtracted from its immediate parent. And the child database spool space can be equal as its parent. Spool space for sysdba and appdba is the same as DBC.

Temp Space

Temp space is the unused permanent space that is used by Global Temporary tables. Temp space is also divided by the number of AMPs.

Unused Perm space is used as Temp space for storing the table and the data in it. If we are specifying some value for Temp space, it should not exceed the value of the parent database and user.

If we do not specify a value, then the maximum value is inherited from that of the parent.

Teradata Statistics

Teradata optimizer gives an execution strategy for every SQL query. This execution strategy is based on the statistics collected on the tables used within the SQL query. Statistics on the table are collected using COLLECT STATISTICS command.

The COLLECT STATISTICS (Optimizer form) statement collects demographic data for one or more columns of a base table, hash index, or join index, computes a statistical profile of the collected data, and stores the synopsis in the Data Dictionary.

The Optimizer uses the synopsis data when it generates its table access and joins plans.

Environment Information

Teradata Statistics environment needs the following:

- Number of Nodes, AMPs, and CPUs

- Amount of memory

Data Demographics

Data Demographics consider the following:

- Number of rows

- Row size

- Range of values in the table

- Number of rows per value

- Number of Nulls

Usage

We should collect statistics on newly created, empty data tables. An empty collection defines the columns, indexes, and synoptic data structure for loaded groups.

We can easily collect statistics again after the table is populated for prototyping, and back when it is in production.

We can collect statistics in the following ways.

- A unique index, which can be:

- Primary or secondary

- Single or multiple columns

- Partitioned or non-partitioned

- A non-unique index, which can be:

- Primary or secondary

- Single or multiple columns

- Partitioned or non-partitioned

- With or without COMPRESS fields

- A non-indexed column or set of columns, which can be:

- Partitioned or non-partitioned

- With or without COMPRESS fields

- A temporary table

- If we specify the TEMPORARY keyword but a materialized table does not exist, the system first materializes an instance based on the specified column names and indexes.

This means that after a valid instance is created, we can re-collect statistics on the columns by entering COLLECT STATISTICS and the TEMPORARY keyword without having to specify the desired columns and index. - If we omit the TEMPORARY keyword, but the table is temporary, statistics are collected for an empty base table rather than the materialized instance.

- If we specify the TEMPORARY keyword but a materialized table does not exist, the system first materializes an instance based on the specified column names and indexes.

- Sample (system-selected percentage) of the rows of a data table or index, to detect data skew and dynamically increase the sample size when found.

- The system does not store both sampled and defined statistics for the same index or column set. Once sampled statistics have been collected, implicit recollection hits the same columns and indexes and operates in the same mode.

- Join index

- Hash index

- NoPI table

How to approach Collect Statistics

There are three approaches to collect statistics on the table.

- Random AMP Sampling

- Full statistics collection

- Using the SAMPLE option

Collecting Statistics on Table

COLLECT STATISTICS command is used to collect statistics on a table.

Syntax

Following is the basic syntax to collect statistics on a table.

Example

Consider an Employee table with the following records, such as:

| Emp_Id | First_Name | Last_Name | Department_No |

|---|---|---|---|

| 202001 | Mike | Richard | 1 |

| 202002 | Robert | Williams | 2 |

| 202003 | Peter | Collin | 2 |

| 202004 | Alexa | Stuart | 1 |

| 202005 | Robert | Peterson | 1 |

We are going to run the following query to collect statistics for the Emp_Id, First_Name columns of the Employee table.

When the above query is executed, it produces the following output.

Update completed. 2 rows changed.

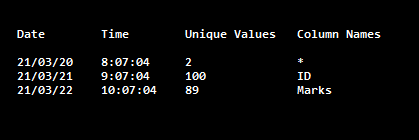

Viewing Statistics

We can view the collected statistics using the HELP STATISTICS command.

Syntax

Following is the syntax to view the statistics collected.

Example

Following is an example to view the statistics collected on the Employee table.

When the above query is executed, it produces the following table with updated columns and their values.

Date Time Unique Values Column Names 6/2/20 10:05:02 5 * 6/2/20 10:05:02 5 Emp_Id, First_Name

Teradata Compression

Compression reduces the physical size of the stored information. The goal of compression is to represent information accurately using the fewest number of bits.

The Compression methods are either logical or physical. Physical data compression re-encodes information independently of its meaning, and logical data compression substitutes one set of data with another, more compact set.

In Teradata, compression can compress up to 255 distinct values, including NULL. Since the storage is reduced, Teradata can store more records in a block. This results in improved query response time since any input operation can process more rows per block.

Compression can be added at table creation using CREATE TABLE or after table creation using ALTER TABLE command.

Compression has the following essential reasons, such as:

- To reduce storage costs.

- To enhance system performance.

Compression reduces storage costs by storing more logical data per unit of physical capacity. Compression produces smaller rows, resulting in more rows stored per data block and fewer data blocks.

Compression enhances system performance because there is less physical data to retrieve per row for queries. And compressed data remains compressed while in memory, the FSG cache can hold more rows, reducing the size of disk input.

Rules

Teradata Compression method has the following rules to compress stored data, such as:

- Only 255 values can be compressed per column.

- The Primary Index column cannot be compressed.

- Volatile tables cannot be compressed.

Types of Compression

Teradata Database uses several types of compression.

| Database element | Explanation |

|---|---|

| Column values | The storage of those values one time only in the table header, not in the row itself, and pointing to them using an array of presence bits in the row header. It applies to:

|

| Hash and Join indexes | A logical row compression in which multiple sets of non-repeating column values are appended to a single set of repeating column values. This allows the system to store the repeating value set only once, while any non-repeating column values are stored as logical segmental extensions of the base repeating set. |

| Data blocks | The storage of primary table data, or join or hash index subtable data. |

| Partition containers | The auto-compression method set determined by Teradata Database to apply to a container of a column-partitioned table or join index when we have not specified the NO AUTO COMPRESS option at the time the object was created. |

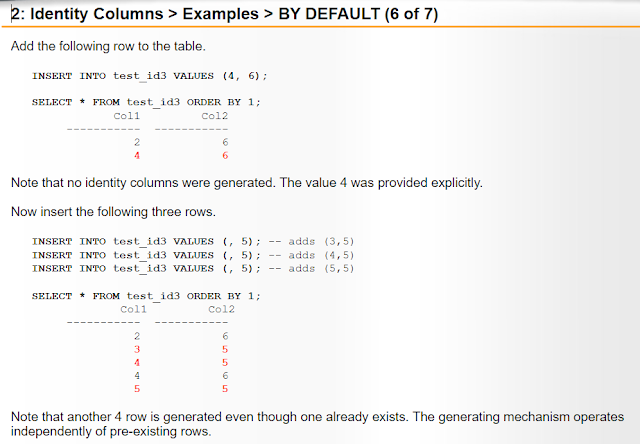

Multi-Value Compression (MVC)

Multi-value compression (MVC) compresses repeating values in a column when we specify the value in a compression list in the column definition.