starburst

https://docs.starburst.io/introduction/architecture.html

Starburst Enterprise platform (SEP) is a fast, interactive distributed SQL query engine that decouples compute from data storage. SEP lets you query data where it lives, including Hive, Snowflake, MySQL or even proprietary data stores.

NOT A DB, IS FEDERATED QUERY ENGINE.

is tool that enable fast and secure access to data across different source.

With Starburst as a single point of access and Immuta as a single point of access control, data teams can optimize performance and streamline self-service data access from a centralized access control plane.Built on trino.

Trino (formerly Presto® SQL) is the fastest open source, massively parallel processing SQL query engine designed for analytics of large datasets distributed over one or more data sources in object storage, databases and other systems.

A query engine is a system designed to receive queries, process them, and return results to the users.

An object storage system is a data source that stores data in files that live in a directory structure, rather than a relational database. Some common examples of object storage systems include Amazon S3 and Azure Data Lake Storage (ADLS).

architecture

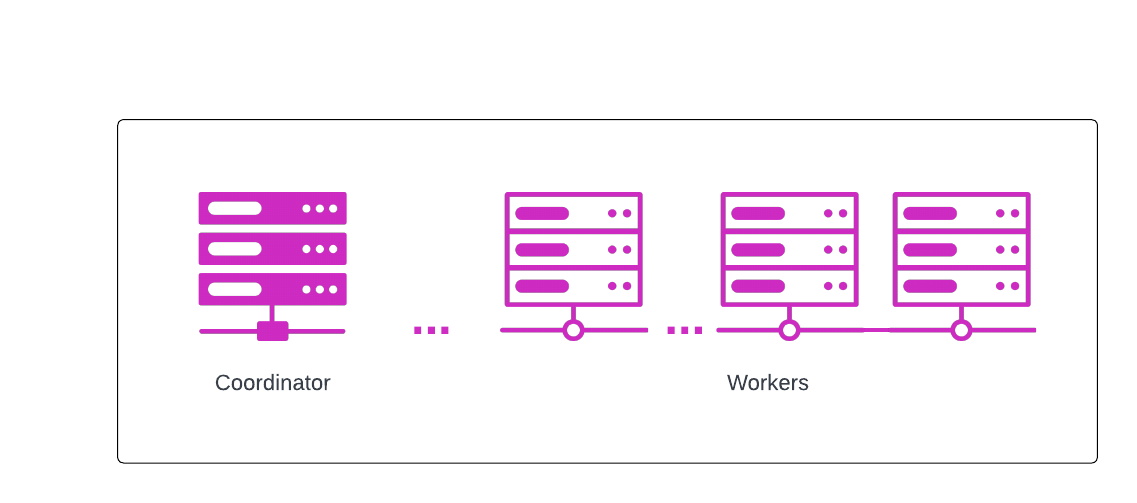

At their heart, Starburst Enterprise and Starburst Galaxy are massively parallel processing (MPP) compute clusters running the distributed SQL query engine, Trino.

A cluster sits between a user’s favorite SQL client, and the existing data sources that they want to query. Data sources are represented by catalogs, and those catalogs specify the data source connectivity that the cluster needs to query the data sources. With Starburst products, you can query any connected data source.

A Trino cluster has two node types:

- Coordinator - a single server that handles incoming queries, and provides query parsing and analysis, scheduling and planning. Distributes processing to worker nodes.

- Workers - servers that execute tasks as directed by the coordinator, including retrieving data from the data source and processing data.

A single server process is run on each node in the cluster; only one node is designated as the coordinator. Worker nodes allow Starburst products to scale horizontally; more worker nodes means more processing resources. You can also scale up by increasing the size of the worker nodes,

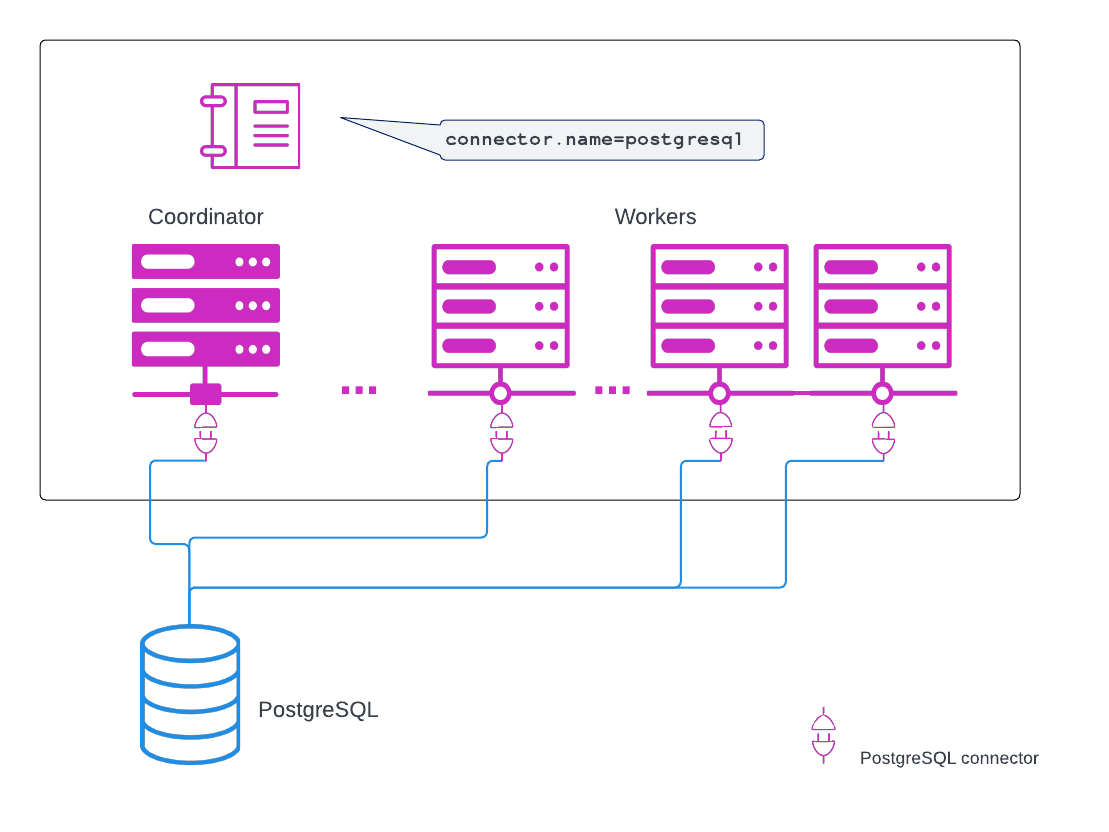

Rounding out Trino-based architecture is our suite of connectors. Connectors are what allow Starburst products to separate compute from storage. The configuration necessary to access a data source is called a catalog. Each catalog is configured with the connector for that particular data source. A connector is called when a catalog that is configured to use the connector is used in a query. Data source connections are established based on catalog configuration. The following example shows how this works with PostgreSQL, however, all RDBMS data sources are similarly configured:

Catalogs #

A catalog is the configuration that enables access to a specific data sources. Every cluster can have numerous catalogs configured, and therefore allow access to many data sources.

List all configured and available catalogs with the SQL statement SHOW CATALOGS (Galaxy / SEP ) in the Trino CLI or any other client:

SHOW CATALOGS;

Catalog

---------

hive_sales

mysql_crm

(2 rows)

The query editor and other client tools also display a list of catalogs.

Connectors #

A connector is specific to the data source it supports. It transforms the underlying data into the SQL concepts of schemas, tables, columns, rows, and data types.

Connectors provide the following between a data source and Starburst Enterprise or Starburst Galaxy:

- Secure communications link

- Translation of data types

- Handling of variances in the SQL implementation, adaption to a provided API, or translation of data in raw files

Every catalog uses a specific connector. Connectors are built-in features.

In SEP, you must specify a connector to create a catalog. In Starburst Galaxy, you create a catalog and the connector selection and configuration is handled for you.

Schemas #

Every catalog includes one or more schemas. They group together objects. Schemas are often equivalent to a specific database or schema in the underlying data source.

List all available schema in a specific catalog with the SQL statement SHOW SCHEMAS (Galaxy / SEP ) in the Trino CLI or any other client:

SHOW SCHEMA FROM examplecatalog;

Objects #

Every schema includes one or more objects. Typically these objects are tables.

List all available tables in a specific schema with the SQL statement SHOW TABLES (Galaxy / SEP ) in the Trino CLI or any other client:

SHOW TABLES FROM examplecatalog.exampleschema;

Some catalogs also support views and materialized views as objects.

More information about a table is available with the SQL statement SHOW COLUMNS (Galaxy / SEP ):

SHOW COLUMNS FROM examplecatalog.exampleschema.exampletable;

This information includes the columns in the table, the data type of the columns and other information.

Context #

The default context for any SQL statement is the catalog level. As a result any query to access a table needs to specific the catalog, schema and table establishing a fully-qualified name.

SELECT * FROM <catalog>.<schema>.<object>

This allows identical table names in the underlying data sources to be addressed specifically. The following to queries access tables of the same name in completely separate data sources:

SELECT * FROM sales.apac.customer;

SELECT * FROM marketing.americas.users;Querying from multiple catalogs #

Starburst Enterprise and Starburst Galaxy let data consumers query anything, anywhere, and get the data they need in a single query. Specifically, they support queries that combine data from many different data sources at the same time.

Fully-qualified object names are critical when querying from multiple sources:

SELECT * FROM <catalog>.<schema>.<object>;

Here’s an example of data from two different sources, Hive and MySQL, combined into a single query:

SELECT

sfm.account_number

FROM

hive_sales.order_entries.orders oeo

JOIN

mysql_crm.sf_history.customer_master sfm

ON sfm.account_number = oeo.customer_id

WHERE sfm.sf_industry = `medical` AND oeo.order_total > 300

LIMIT 2;

This query uses data from the following sources:

- The

orderstable in theorder_entriesschema, which is defined in thehive_salescatalog - The

customer_mastertable in thesf_historyschema, which is defined in themysql_crmcatalog

Data lakes #

Modern businesses analyze and consume tremendous amounts of data, and modern data architecture has evolved to meet these business needs. Traditional data warehousing architectures struggle to keep up with the rate that businesses need to ingest and consume data.

A data lake is an architecture that allows your data to live in whatever format, software, or geographic region it currently resides in. This frees your data from vendor lock-in and removes the need for lengthy ETL processes that slow your business’s time-to-insight.

Architecture #

The modern data lake incorporates data transformation and governance in front of the lake, and is best described as a data lakehouse. The following diagram describes an example of data lakehouse architecture:

In this architecture, your data lives in whatever software, format, and location it needs in order to be most cost-effective on the lake. On top of this data storage layer, the lakehouse incorporates a data transformation layer that employs governance, materialized views, and other technologies and rules to ensure that the resulting data is ready for consumption.

The optimized data is then ready to be accessed by clients and BI tools through a query engine which handles data-level security, view management, and query optimization regardless of where in the lake the underlying data is stored.

Starburst for the data lake #

Starburst Galaxy and Starburst Enterprise platform (SEP) are ideal tools to get the most value out of your data lakehouse, with features to support scaling, optionality, high performance, and ease of data consumption.

Scaling and optionality #

Starburst products are designed to work in parallel with your data lakehouse, not lock your data into a restrictive, vendor-compliant architecture that increases costs and holds back your operational growth. Starburst products accomplish this with the following features:

- Flexibility to access a wide variety of data sources.

- Standard JDBC/ODBC drivers that allow connections to Starburst from many client and BI tools.

- Cloud-friendly cluster architecture that separates computational resources from storage, allowing for flexible growth in whatever way best suits your operational needs.

- Multi-cluster deployment options to handle cross-region workloads.

High performance #

Starburst products include a high-performing query engine out of the box, with the following features that support the most efficient use of your data lakehouse:

- Query planning that distributes workloads across cluster resources, and pushing optimizations down to data sources to improve both processing speeds and network utilization.

- Options to materialize data on the lake for high-speed access to data independent of performance on the data source.

- High-performance I/O with multiple parallel connections between Starburst cluster nodes and object storage.

- Object store caching and indexing to dynamically accelerate access to your lakehouse data.

Ease of consumption #

Starburst products are a central access point between your data consumers and your data lakehouse, streamlining access to the data most relevant to your users with the following features:

- Centralized access control role and attribute-based access control (RBAC/ABAC) systems per-product that dictate persona-based access to your data.

- Data product management that adds a semantic layer to your data for simplified consumption and sharing.

- Support for modern BI tools and clients, allowing for organization-wide sharing and utilization of data insights.

Object storage fundamentals #

Rather than a traditional relational database, data in object storage systems is stored in a series of files that live in nested directories. These files can then be accessed with a query language using data warehouse systems like Hive. Some examples of object storage systems include the following:

- Apache Hadoop Distributed File System (HDFS)

- Amazon S3

- Azure Data Lake Storage (ADLS)

- Google Cloud Storage (GCS)

- S3-compatible systems such as MinIO

The data files typically use one of the following supported binary formats:

- ORC

- Parquet

- AVRO

Security

There are three main types of security measures for Starburst’s Trino-based clusters:

- User authentication and client security

- Security inside the cluster

Security between the cluster and data sources

Data transfers between the coordinator and workers inside the cluster use REST-based interactions over HTTP/HTTPS. Data transfers between clients and the cluster, and between the cluster and data sources, can also communicate securely via TLS and through REST-based APIs.

User authentication and client security #

Trino-based clusters allow you to authenticate users as they connect through their favorite client.

Clients communicate only with the cluster coordinator via a REST-like API, which can be configured to use TLS.

Authentication #

User authentication in Starburst Galaxy clusters is handled exclusively through the platform itself, which is managed in the Starburst Galaxy UI.

In Starburst Enterprise, authentication can be handled using one or more of the following authentication types:

- LDAP

- OAuth2

- Okta

- Salesforce

- Password files

- Certificates

- JWT

Kerberos

How does this work? #

Data platforms in your organization such as Snowflake, Postgres, and Hive are defined by data engineers as catalogs. Catalogs, in turn, define schemas and their tables. Depending on the data access controls in place, discovering what data catalogs are available to you across all of your data platforms can be easy! Even through a CLI, it’s a single, simple query to get you started with your federated data:

trino> SHOW CATALOGS;

Catalog

---------

hive_sales

mysql_crm

(2 rows)After that, you can easily explore schemas in a catalog with the familiar SHOW SCHEMAS command:

trino> SHOW SCHEMAS FROM hive_sales LIKE `%rder%`;

Schema

---------

order_entries

customer_orders

(2 rows)From there, you can of course see the tables you might want to query:

trino> SHOW TABLES FROM order_entries;

Table

-------

orders

order_items

(2 rows)You might notice that even though you know from experience that some of your data is in MySQL and others in Hive, they all show up in the unified SHOW CATALOGS results. From here, you can simply join the data sources from different platforms as if they were from different tables. You just need to use their fully qualified names:

trino> SELECT

sfm.account_number

FROM

hive_sales.order_entries.orders oeo

JOIN

mysql_crm.sf_history.customer_master sfm

ON sfm.account_number = oeo.customer_id

WHERE sfm.sf_industry = `medical` AND oeo.order_total > 300

LIMIT 2;How do I get started? #

To begin, get the latest Starburst JDBC or ODBC driver and get it installed. Note that even though you very likely already have a JDBC or ODBC driver installed for your work, you do need the Starburst-specific driver. Be careful not to install either in the same directory with other JDBC or ODBC drivers.

If your data ops group has not already given you the required connection information, reach out to them for the following:

- the JDBC URL -

jdbc:trino://example.net:8080 - whether your org is using SSL to connect

- the type of authentication your org is using - username or LDAP

When you have that info and your driver is installed, you are ready to connect.

What kind of tools can I use? #

More than likely, you can use all your client tools, and even ones on your wishlist with the the help of the Clients section of our documentation.

CALL

Call a procedure using positional arguments:

Call a procedure using named arguments:

Call a procedure using a fully qualified name:

CALL catalog.schema.test();DEALLOCATE PREPARE#

Synopsis#

Description#

Removes a statement with the name statement_name from the list of prepared statements in a session.

Examples#

Deallocate a statement with the name my_query:

DEALLOCATE PREPARE my_query;DESCRIBE INPUT#

Synopsis#

Description#

Lists the input parameters of a prepared statement along with the position and type of each parameter. Parameter types that cannot be determined will appear as unknown.

Examples#

Prepare and describe a query with three parameters:

Prepare and describe a query with no parameters:

DESCRIBE OUTPUT#

Synopsis#

Description#

List the output columns of a prepared statement, including the column name (or alias), catalog, schema, table, type, type size in bytes, and a boolean indicating if the column is aliased.

List the output columns of a prepared statement, including the column name (or alias), catalog, schema, table, type, type size in bytes, and a boolean indicating if the column is aliased.

Examples#

Prepare and describe a query with four output columns:

Column Name | Catalog | Schema | Table | Type | Type Size | Aliased

-------------+---------+--------+--------+---------+-----------+---------

nationkey | tpch | sf1 | nation | bigint | 8 | false

name | tpch | sf1 | nation | varchar | 0 | false

regionkey | tpch | sf1 | nation | bigint | 8 | false

comment | tpch | sf1 | nation | varchar | 0 | false

(4 rows)

Prepare and describe a query with four output columns:

Column Name | Catalog | Schema | Table | Type | Type Size | Aliased

-------------+---------+--------+--------+---------+-----------+---------

nationkey | tpch | sf1 | nation | bigint | 8 | false

name | tpch | sf1 | nation | varchar | 0 | false

regionkey | tpch | sf1 | nation | bigint | 8 | false

comment | tpch | sf1 | nation | varchar | 0 | false

(4 rows)

EXECUTE#

Synopsis#

Description#

Executes a prepared statement with the name statement_name. Parameter values are defined in the USING clause.

Executes a prepared statement with the name statement_name. Parameter values are defined in the USING clause.

Examples#

Prepare and execute a query with no parameters:

Prepare and execute a query with two parameters:

This is equivalent to:

Prepare and execute a query with no parameters:

Prepare and execute a query with two parameters:

This is equivalent to:

EXECUTE IMMEDIATE#

Synopsis#

Description#

Executes a statement without the need to prepare or deallocate the statement. Parameter values are defined in the USING clause.

Executes a statement without the need to prepare or deallocate the statement. Parameter values are defined in the USING clause.

Examples#

Execute a query with no parameters:

Execute a query with two parameters:

This is equivalent to:

Execute a query with no parameters:

Execute a query with two parameters:

This is equivalent to:

EXPLAIN#

Synopsis#

where option can be one of:

where option can be one of:

Description#

Show the logical or distributed execution plan of a statement, or validate the statement. The distributed plan is shown by default. Each plan fragment of the distributed plan is executed by a single or multiple Trino nodes. Fragments separation represent the data exchange between Trino nodes. Fragment type specifies how the fragment is executed by Trino nodes and how the data is distributed between fragments:

SINGLEFragment is executed on a single node.

HASHFragment is executed on a fixed number of nodes with the input data distributed using a hash function.

ROUND_ROBINFragment is executed on a fixed number of nodes with the input data distributed in a round-robin fashion.

BROADCASTFragment is executed on a fixed number of nodes with the input data broadcasted to all nodes.

SOURCEFragment is executed on nodes where input splits are accessed.

Show the logical or distributed execution plan of a statement, or validate the statement. The distributed plan is shown by default. Each plan fragment of the distributed plan is executed by a single or multiple Trino nodes. Fragments separation represent the data exchange between Trino nodes. Fragment type specifies how the fragment is executed by Trino nodes and how the data is distributed between fragments:

SINGLEFragment is executed on a single node.

HASHFragment is executed on a fixed number of nodes with the input data distributed using a hash function.

ROUND_ROBINFragment is executed on a fixed number of nodes with the input data distributed in a round-robin fashion.

BROADCASTFragment is executed on a fixed number of nodes with the input data broadcasted to all nodes.

SOURCEFragment is executed on nodes where input splits are accessed.

Examples#

EXPLAIN (TYPE LOGICAL)#

Process the supplied query statement and create a logical plan in text format:

Process the supplied query statement and create a logical plan in text format:

PREPARE#

Synopsis#

Description#

Prepares a statement for execution at a later time. Prepared statements are queries that are saved in a session with a given name. The statement can include parameters in place of literals to be replaced at execution time. Parameters are represented by question marks.

Examples#

Prepare a select query:

Prepare a select query that includes parameters. The values to compare with regionkey and nationkey will be filled in with the EXECUTE statement:

Prepare an insert query:

SHOW FUNCTIONS#

Synopsis#

Description#

List all the functions available for use in queries. For each function returned, the following information is displayed:

Function name

Return type

Argument types

Function type

Deterministic

Description

Specify a pattern in the optional LIKE clause to filter the results to the desired subset. For example, the following query allows you to find functions beginning with array:

List all the functions available for use in queries. For each function returned, the following information is displayed:

Function name

Return type

Argument types

Function type

Deterministic

Description

Specify a pattern in the optional LIKE clause to filter the results to the desired subset. For example, the following query allows you to find functions beginning with array:

USE#

Synopsis#

Description#

Update the session to use the specified catalog and schema. If a catalog is not specified, the schema is resolved relative to the current catalog.

Examples#

USE hive.finance;

USE information_schema;VALUES#

Synopsis#

where row is a single expression or

Description#

Defines a literal inline table.

VALUES can be used anywhere a query can be used (e.g., the FROM clause of a SELECT, an INSERT, or even at the top level). VALUES creates an anonymous table without column names, but the table and columns can be named using an AS clause with column aliases.

Examples#

Return a table with one column and three rows:

Return a table with two columns and three rows:

Return table with column id and name:

Create a new table with column id and name:

SELECT#

Synopsis#

[ WITH [ RECURSIVE ] with_query [, ...] ]

SELECT [ ALL | DISTINCT ] select_expression [, ...]

[ FROM from_item [, ...] ]

[ WHERE condition ]

[ GROUP BY [ ALL | DISTINCT ] grouping_element [, ...] ]

[ HAVING condition]

[ WINDOW window_definition_list]

[ { UNION | INTERSECT | EXCEPT } [ ALL | DISTINCT ] select ]

[ ORDER BY expression [ ASC | DESC ] [, ...] ]

[ OFFSET count [ ROW | ROWS ] ]

[ LIMIT { count | ALL } ]

[ FETCH { FIRST | NEXT } [ count ] { ROW | ROWS } { ONLY | WITH TIES } ]

where from_item is one of

For detailed description of MATCH_RECOGNIZE clause, see pattern recognition in FROM clause.

For description of table functions usage, see table functions.

and join_type is one of

and grouping_element is one of

[ WITH [ RECURSIVE ] with_query [, ...] ]

SELECT [ ALL | DISTINCT ] select_expression [, ...]

[ FROM from_item [, ...] ]

[ WHERE condition ]

[ GROUP BY [ ALL | DISTINCT ] grouping_element [, ...] ]

[ HAVING condition]

[ WINDOW window_definition_list]

[ { UNION | INTERSECT | EXCEPT } [ ALL | DISTINCT ] select ]

[ ORDER BY expression [ ASC | DESC ] [, ...] ]

[ OFFSET count [ ROW | ROWS ] ]

[ LIMIT { count | ALL } ]

[ FETCH { FIRST | NEXT } [ count ] { ROW | ROWS } { ONLY | WITH TIES } ]

where from_item is one of

For detailed description of MATCH_RECOGNIZE clause, see pattern recognition in FROM clause.

For description of table functions usage, see table functions.

and join_type is one of

and grouping_element is one of

Description#

Retrieve rows from zero or more tables.

Retrieve rows from zero or more tables.

WITH clause#

The WITH clause defines named relations for use within a query. It allows flattening nested queries or simplifying subqueries. This also works with multiple subqueries:

Additionally, the relations within a WITH clause can chain:

Warning

Currently, the SQL for the WITH clause will be inlined anywhere the named relation is used. This means that if the relation is used more than once and the query is non-deterministic, the results may be different each time.

The WITH clause defines named relations for use within a query. It allows flattening nested queries or simplifying subqueries. This also works with multiple subqueries:

Additionally, the relations within a WITH clause can chain:

Warning

Currently, the SQL for the WITH clause will be inlined anywhere the named relation is used. This means that if the relation is used more than once and the query is non-deterministic, the results may be different each time.

WITH RECURSIVE clause#

The WITH RECURSIVE clause is a variant of the WITH clause. It defines a list of queries to process, including recursive processing of suitable queries.

Warning

This feature is experimental only. Proceed to use it only if you understand potential query failures and the impact of the recursion processing on your workload.

A recursive WITH-query must be shaped as a UNION of two relations. The first relation is called the recursion base, and the second relation is called the recursion step. Trino supports recursive WITH-queries with a single recursive reference to a WITH-query from within the query. The name T of the query T can be mentioned once in the FROM clause of the recursion step relation.

The following listing shows a simple example, that displays a commonly used form of a single query in the list:

In the preceding query the simple assignment VALUES (1) defines the recursion base relation. SELECT n + 1 FROM t WHERE n < 4 defines the recursion step relation. The recursion processing performs these steps:

recursive base yields 1

first recursion yields 1 + 1 = 2

second recursion uses the result from the first and adds one: 2 + 1 = 3

third recursion uses the result from the second and adds one again: 3 + 1 = 4

fourth recursion aborts since n = 4

this results in t having values 1, 2, 3 and 4

the final statement performs the sum operation of these elements with the final result value 10

The types of the returned columns are those of the base relation. Therefore it is required that types in the step relation can be coerced to base relation types.

The RECURSIVE clause applies to all queries in the WITH list, but not all of them must be recursive. If a WITH-query is not shaped according to the rules mentioned above or it does not contain a recursive reference, it is processed like a regular WITH-query. Column aliases are mandatory for all the queries in the recursive WITH list.

The following limitations apply as a result of following the SQL standard and due to implementation choices, in addition to WITH clause limitations:

only single-element recursive cycles are supported. Like in regular WITH-queries, references to previous queries in the WITH list are allowed. References to following queries are forbidden.

usage of outer joins, set operations, limit clause, and others is not always allowed in the step relation

recursion depth is fixed, defaults to 10, and doesn’t depend on the actual query results

You can adjust the recursion depth with the session property max_recursion_depth. When changing the value consider that the size of the query plan growth is quadratic with the recursion depth.

The WITH RECURSIVE clause is a variant of the WITH clause. It defines a list of queries to process, including recursive processing of suitable queries.

Warning

This feature is experimental only. Proceed to use it only if you understand potential query failures and the impact of the recursion processing on your workload.

A recursive WITH-query must be shaped as a UNION of two relations. The first relation is called the recursion base, and the second relation is called the recursion step. Trino supports recursive WITH-queries with a single recursive reference to a WITH-query from within the query. The name T of the query T can be mentioned once in the FROM clause of the recursion step relation.

The following listing shows a simple example, that displays a commonly used form of a single query in the list:

In the preceding query the simple assignment VALUES (1) defines the recursion base relation. SELECT n + 1 FROM t WHERE n < 4 defines the recursion step relation. The recursion processing performs these steps:

recursive base yields

1first recursion yields

1 + 1 = 2second recursion uses the result from the first and adds one:

2 + 1 = 3third recursion uses the result from the second and adds one again:

3 + 1 = 4fourth recursion aborts since

n = 4this results in

thaving values1,2,3and4the final statement performs the sum operation of these elements with the final result value

10

The types of the returned columns are those of the base relation. Therefore it is required that types in the step relation can be coerced to base relation types.

The RECURSIVE clause applies to all queries in the WITH list, but not all of them must be recursive. If a WITH-query is not shaped according to the rules mentioned above or it does not contain a recursive reference, it is processed like a regular WITH-query. Column aliases are mandatory for all the queries in the recursive WITH list.

The following limitations apply as a result of following the SQL standard and due to implementation choices, in addition to WITH clause limitations:

only single-element recursive cycles are supported. Like in regular

WITH-queries, references to previous queries in theWITHlist are allowed. References to following queries are forbidden.usage of outer joins, set operations, limit clause, and others is not always allowed in the step relation

recursion depth is fixed, defaults to

10, and doesn’t depend on the actual query results

You can adjust the recursion depth with the session property max_recursion_depth. When changing the value consider that the size of the query plan growth is quadratic with the recursion depth.

If column aliases are specified, they override any preexisting column or row field names:

Otherwise, the existing names are used:

and in their absence, anonymous columns are produced:

Otherwise, the existing names are used:

and in their absence, anonymous columns are produced:

GROUP BY clause#

The GROUP BY clause divides the output of a SELECT statement into groups of rows containing matching values. A simple GROUP BY clause may contain any expression composed of input columns or it may be an ordinal number selecting an output column by position (starting at one).

The following queries are equivalent. They both group the output by the nationkey input column with the first query using the ordinal position of the output column and the second query using the input column name:

GROUP BY clauses can group output by input column names not appearing in the output of a select statement. For example, the following query generates row counts for the customer table using the input column mktsegment:

When a GROUP BY clause is used in a SELECT statement all output expressions must be either aggregate functions or columns present in the GROUP BY clause.

The GROUP BY clause divides the output of a SELECT statement into groups of rows containing matching values. A simple GROUP BY clause may contain any expression composed of input columns or it may be an ordinal number selecting an output column by position (starting at one).

The following queries are equivalent. They both group the output by the nationkey input column with the first query using the ordinal position of the output column and the second query using the input column name:

GROUP BY clauses can group output by input column names not appearing in the output of a select statement. For example, the following query generates row counts for the customer table using the input column mktsegment:

When a GROUP BY clause is used in a SELECT statement all output expressions must be either aggregate functions or columns present in the GROUP BY clause.

Complex grouping operations#

Trino also supports complex aggregations using the GROUPING SETS, CUBE and ROLLUP syntax. This syntax allows users to perform analysis that requires aggregation on multiple sets of columns in a single query. Complex grouping operations do not support grouping on expressions composed of input columns. Only column names are allowed.

Complex grouping operations are often equivalent to a UNION ALL of simple GROUP BY expressions, as shown in the following examples. This equivalence does not apply, however, when the source of data for the aggregation is non-deterministic.

Trino also supports complex aggregations using the GROUPING SETS, CUBE and ROLLUP syntax. This syntax allows users to perform analysis that requires aggregation on multiple sets of columns in a single query. Complex grouping operations do not support grouping on expressions composed of input columns. Only column names are allowed.

Complex grouping operations are often equivalent to a UNION ALL of simple GROUP BY expressions, as shown in the following examples. This equivalence does not apply, however, when the source of data for the aggregation is non-deterministic.

GROUPING SETS#

Grouping sets allow users to specify multiple lists of columns to group on. The columns not part of a given sublist of grouping columns are set to NULL.

origin_state | origin_zip | destination_state | destination_zip | package_weight

--------------+------------+-------------------+-----------------+----------------

California | 94131 | New Jersey | 8648 | 13

California | 94131 | New Jersey | 8540 | 42

New Jersey | 7081 | Connecticut | 6708 | 225

California | 90210 | Connecticut | 6927 | 1337

California | 94131 | Colorado | 80302 | 5

New York | 10002 | New Jersey | 8540 | 3

(6 rows)

GROUPING SETS semantics are demonstrated by this example query:

origin_state | origin_zip | destination_state | _col0

--------------+------------+-------------------+-------

New Jersey | NULL | NULL | 225

California | NULL | NULL | 1397

New York | NULL | NULL | 3

California | 90210 | NULL | 1337

California | 94131 | NULL | 60

New Jersey | 7081 | NULL | 225

New York | 10002 | NULL | 3

NULL | NULL | Colorado | 5

NULL | NULL | New Jersey | 58

NULL | NULL | Connecticut | 1562

(10 rows)

The preceding query may be considered logically equivalent to a UNION ALL of multiple GROUP BY queries:

SELECT origin_state, NULL, NULL, sum(package_weight)

FROM shipping GROUP BY origin_state

UNION ALL

SELECT origin_state, origin_zip, NULL, sum(package_weight)

FROM shipping GROUP BY origin_state, origin_zip

UNION ALL

SELECT NULL, NULL, destination_state, sum(package_weight)

FROM shipping GROUP BY destination_state;

However, the query with the complex grouping syntax (GROUPING SETS, CUBE or ROLLUP) will only read from the underlying data source once, while the query with the UNION ALL reads the underlying data three times. This is why queries with a UNION ALL may produce inconsistent results when the data source is not deterministic.

Grouping sets allow users to specify multiple lists of columns to group on. The columns not part of a given sublist of grouping columns are set to NULL.

origin_state | origin_zip | destination_state | destination_zip | package_weight

--------------+------------+-------------------+-----------------+----------------

California | 94131 | New Jersey | 8648 | 13

California | 94131 | New Jersey | 8540 | 42

New Jersey | 7081 | Connecticut | 6708 | 225

California | 90210 | Connecticut | 6927 | 1337

California | 94131 | Colorado | 80302 | 5

New York | 10002 | New Jersey | 8540 | 3

(6 rows)

GROUPING SETS semantics are demonstrated by this example query:

origin_state | origin_zip | destination_state | _col0

--------------+------------+-------------------+-------

New Jersey | NULL | NULL | 225

California | NULL | NULL | 1397

New York | NULL | NULL | 3

California | 90210 | NULL | 1337

California | 94131 | NULL | 60

New Jersey | 7081 | NULL | 225

New York | 10002 | NULL | 3

NULL | NULL | Colorado | 5

NULL | NULL | New Jersey | 58

NULL | NULL | Connecticut | 1562

(10 rows)

The preceding query may be considered logically equivalent to a UNION ALL of multiple GROUP BY queries:

SELECT origin_state, NULL, NULL, sum(package_weight)

FROM shipping GROUP BY origin_state

UNION ALL

SELECT origin_state, origin_zip, NULL, sum(package_weight)

FROM shipping GROUP BY origin_state, origin_zip

UNION ALL

SELECT NULL, NULL, destination_state, sum(package_weight)

FROM shipping GROUP BY destination_state;

However, the query with the complex grouping syntax (GROUPING SETS, CUBE or ROLLUP) will only read from the underlying data source once, while the query with the UNION ALL reads the underlying data three times. This is why queries with a UNION ALL may produce inconsistent results when the data source is not deterministic.

CUBE#

The CUBE operator generates all possible grouping sets (i.e. a power set) for a given set of columns. For example, the query:

is equivalent to:

origin_state | destination_state | _col0

--------------+-------------------+-------

California | New Jersey | 55

California | Colorado | 5

New York | New Jersey | 3

New Jersey | Connecticut | 225

California | Connecticut | 1337

California | NULL | 1397

New York | NULL | 3

New Jersey | NULL | 225

NULL | New Jersey | 58

NULL | Connecticut | 1562

NULL | Colorado | 5

NULL | NULL | 1625

(12 rows)

The CUBE operator generates all possible grouping sets (i.e. a power set) for a given set of columns. For example, the query:

is equivalent to:

origin_state | destination_state | _col0

--------------+-------------------+-------

California | New Jersey | 55

California | Colorado | 5

New York | New Jersey | 3

New Jersey | Connecticut | 225

California | Connecticut | 1337

California | NULL | 1397

New York | NULL | 3

New Jersey | NULL | 225

NULL | New Jersey | 58

NULL | Connecticut | 1562

NULL | Colorado | 5

NULL | NULL | 1625

(12 rows)

ROLLUP#

The ROLLUP operator generates all possible subtotals for a given set of columns. For example, the query:

is equivalent to:

The ROLLUP operator generates all possible subtotals for a given set of columns. For example, the query:

is equivalent to:

Combining multiple grouping expressions#

Multiple grouping expressions in the same query are interpreted as having cross-product semantics. For example, the following query:

which can be rewritten as:

is logically equivalent to:

origin_state | destination_state | origin_zip | _col3

--------------+-------------------+------------+-------

New York | New Jersey | 10002 | 3

California | New Jersey | 94131 | 55

New Jersey | Connecticut | 7081 | 225

California | Connecticut | 90210 | 1337

California | Colorado | 94131 | 5

New York | New Jersey | NULL | 3

New Jersey | Connecticut | NULL | 225

California | Colorado | NULL | 5

California | Connecticut | NULL | 1337

California | New Jersey | NULL | 55

(10 rows)

The ALL and DISTINCT quantifiers determine whether duplicate grouping sets each produce distinct output rows. This is particularly useful when multiple complex grouping sets are combined in the same query. For example, the following query:

is equivalent to:

SELECT origin_state, destination_state, origin_zip, sum(package_weight)

FROM shipping

GROUP BY GROUPING SETS (

(origin_state, destination_state, origin_zip),

(origin_state, origin_zip),

(origin_state, destination_state, origin_zip),

(origin_state, origin_zip),

(origin_state, destination_state),

(origin_state),

(origin_state, destination_state),

(origin_state),

(origin_state, destination_state),

(origin_state),

(destination_state),

()

);

However, if the query uses the DISTINCT quantifier for the GROUP BY:

only unique grouping sets are generated:

The default set quantifier is ALL.

Multiple grouping expressions in the same query are interpreted as having cross-product semantics. For example, the following query:

which can be rewritten as:

is logically equivalent to:

origin_state | destination_state | origin_zip | _col3

--------------+-------------------+------------+-------

New York | New Jersey | 10002 | 3

California | New Jersey | 94131 | 55

New Jersey | Connecticut | 7081 | 225

California | Connecticut | 90210 | 1337

California | Colorado | 94131 | 5

New York | New Jersey | NULL | 3

New Jersey | Connecticut | NULL | 225

California | Colorado | NULL | 5

California | Connecticut | NULL | 1337

California | New Jersey | NULL | 55

(10 rows)

The ALL and DISTINCT quantifiers determine whether duplicate grouping sets each produce distinct output rows. This is particularly useful when multiple complex grouping sets are combined in the same query. For example, the following query:

is equivalent to:

SELECT origin_state, destination_state, origin_zip, sum(package_weight)

FROM shipping

GROUP BY GROUPING SETS (

(origin_state, destination_state, origin_zip),

(origin_state, origin_zip),

(origin_state, destination_state, origin_zip),

(origin_state, origin_zip),

(origin_state, destination_state),

(origin_state),

(origin_state, destination_state),

(origin_state),

(origin_state, destination_state),

(origin_state),

(destination_state),

()

);

However, if the query uses the DISTINCT quantifier for the GROUP BY:

only unique grouping sets are generated:

The default set quantifier is ALL.

GROUPING operation#

grouping(col1, ..., colN) -> bigint

The grouping operation returns a bit set converted to decimal, indicating which columns are present in a grouping. It must be used in conjunction with GROUPING SETS, ROLLUP, CUBE or GROUP BY and its arguments must match exactly the columns referenced in the corresponding GROUPING SETS, ROLLUP, CUBE or GROUP BY clause.

To compute the resulting bit set for a particular row, bits are assigned to the argument columns with the rightmost column being the least significant bit. For a given grouping, a bit is set to 0 if the corresponding column is included in the grouping and to 1 otherwise. For example, consider the query below:

origin_state | origin_zip | destination_state | _col3 | _col4

--------------+------------+-------------------+-------+-------

California | NULL | NULL | 1397 | 3

New Jersey | NULL | NULL | 225 | 3

New York | NULL | NULL | 3 | 3

California | 94131 | NULL | 60 | 1

New Jersey | 7081 | NULL | 225 | 1

California | 90210 | NULL | 1337 | 1

New York | 10002 | NULL | 3 | 1

NULL | NULL | New Jersey | 58 | 6

NULL | NULL | Connecticut | 1562 | 6

NULL | NULL | Colorado | 5 | 6

(10 rows)

The first grouping in the above result only includes the origin_state column and excludes the origin_zip and destination_state columns. The bit set constructed for that grouping is 011 where the most significant bit represents origin_state.

grouping(col1, ..., colN) -> bigint

The grouping operation returns a bit set converted to decimal, indicating which columns are present in a grouping. It must be used in conjunction with GROUPING SETS, ROLLUP, CUBE or GROUP BY and its arguments must match exactly the columns referenced in the corresponding GROUPING SETS, ROLLUP, CUBE or GROUP BY clause.

To compute the resulting bit set for a particular row, bits are assigned to the argument columns with the rightmost column being the least significant bit. For a given grouping, a bit is set to 0 if the corresponding column is included in the grouping and to 1 otherwise. For example, consider the query below:

origin_state | origin_zip | destination_state | _col3 | _col4

--------------+------------+-------------------+-------+-------

California | NULL | NULL | 1397 | 3

New Jersey | NULL | NULL | 225 | 3

New York | NULL | NULL | 3 | 3

California | 94131 | NULL | 60 | 1

New Jersey | 7081 | NULL | 225 | 1

California | 90210 | NULL | 1337 | 1

New York | 10002 | NULL | 3 | 1

NULL | NULL | New Jersey | 58 | 6

NULL | NULL | Connecticut | 1562 | 6

NULL | NULL | Colorado | 5 | 6

(10 rows)

The first grouping in the above result only includes the origin_state column and excludes the origin_zip and destination_state columns. The bit set constructed for that grouping is 011 where the most significant bit represents origin_state.

HAVING clause#

The HAVING clause is used in conjunction with aggregate functions and the GROUP BY clause to control which groups are selected. A HAVING clause eliminates groups that do not satisfy the given conditions. HAVING filters groups after groups and aggregates are computed.

The following example queries the customer table and selects groups with an account balance greater than the specified value:

_col0 | mktsegment | nationkey | totalbal

-------+------------+-----------+----------

1272 | AUTOMOBILE | 19 | 5856939

1253 | FURNITURE | 14 | 5794887

1248 | FURNITURE | 9 | 5784628

1243 | FURNITURE | 12 | 5757371

1231 | HOUSEHOLD | 3 | 5753216

1251 | MACHINERY | 2 | 5719140

1247 | FURNITURE | 8 | 5701952

(7 rows)

The HAVING clause is used in conjunction with aggregate functions and the GROUP BY clause to control which groups are selected. A HAVING clause eliminates groups that do not satisfy the given conditions. HAVING filters groups after groups and aggregates are computed.

The following example queries the customer table and selects groups with an account balance greater than the specified value:

_col0 | mktsegment | nationkey | totalbal

-------+------------+-----------+----------

1272 | AUTOMOBILE | 19 | 5856939

1253 | FURNITURE | 14 | 5794887

1248 | FURNITURE | 9 | 5784628

1243 | FURNITURE | 12 | 5757371

1231 | HOUSEHOLD | 3 | 5753216

1251 | MACHINERY | 2 | 5719140

1247 | FURNITURE | 8 | 5701952

(7 rows)

WINDOW clause#

The WINDOW clause is used to define named window specifications. The defined named window specifications can be referred to in the SELECT and ORDER BY clauses of the enclosing query:

The window definition list of WINDOW clause can contain one or multiple named window specifications of the form

A window specification has the following components:

The existing window name, which refers to a named window specification in the WINDOW clause. The window specification associated with the referenced name is the basis of the current specification.

The partition specification, which separates the input rows into different partitions. This is analogous to how the GROUP BY clause separates rows into different groups for aggregate functions.

The ordering specification, which determines the order in which input rows will be processed by the window function.

The window frame, which specifies a sliding window of rows to be processed by the function for a given row. If the frame is not specified, it defaults to RANGE UNBOUNDED PRECEDING, which is the same as RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW. This frame contains all rows from the start of the partition up to the last peer of the current row. In the absence of ORDER BY, all rows are considered peers, so RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW is equivalent to BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING. The window frame syntax supports additional clauses for row pattern recognition. If the row pattern recognition clauses are specified, the window frame for a particular row consists of the rows matched by a pattern starting from that row. Additionally, if the frame specifies row pattern measures, they can be called over the window, similarly to window functions. For more details, see Row pattern recognition in window structures.

Each window component is optional. If a window specification does not specify window partitioning, ordering or frame, those components are obtained from the window specification referenced by the existing window name, or from another window specification in the reference chain. In case when there is no existing window name specified, or none of the referenced window specifications contains the component, the default value is used.

The WINDOW clause is used to define named window specifications. The defined named window specifications can be referred to in the SELECT and ORDER BY clauses of the enclosing query:

The window definition list of WINDOW clause can contain one or multiple named window specifications of the form

A window specification has the following components:

The existing window name, which refers to a named window specification in the

WINDOWclause. The window specification associated with the referenced name is the basis of the current specification.The partition specification, which separates the input rows into different partitions. This is analogous to how the

GROUP BYclause separates rows into different groups for aggregate functions.The ordering specification, which determines the order in which input rows will be processed by the window function.

The window frame, which specifies a sliding window of rows to be processed by the function for a given row. If the frame is not specified, it defaults to

RANGE UNBOUNDED PRECEDING, which is the same asRANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW. This frame contains all rows from the start of the partition up to the last peer of the current row. In the absence ofORDER BY, all rows are considered peers, soRANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROWis equivalent toBETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING. The window frame syntax supports additional clauses for row pattern recognition. If the row pattern recognition clauses are specified, the window frame for a particular row consists of the rows matched by a pattern starting from that row. Additionally, if the frame specifies row pattern measures, they can be called over the window, similarly to window functions. For more details, see Row pattern recognition in window structures.

Each window component is optional. If a window specification does not specify window partitioning, ordering or frame, those components are obtained from the window specification referenced by the existing window name, or from another window specification in the reference chain. In case when there is no existing window name specified, or none of the referenced window specifications contains the component, the default value is used.

Set operations#

UNION INTERSECT and EXCEPT are all set operations. These clauses are used to combine the results of more than one select statement into a single result set:

The argument ALL or DISTINCT controls which rows are included in the final result set. If the argument ALL is specified all rows are included even if the rows are identical. If the argument DISTINCT is specified only unique rows are included in the combined result set. If neither is specified, the behavior defaults to DISTINCT.

Multiple set operations are processed left to right, unless the order is explicitly specified via parentheses. Additionally, INTERSECT binds more tightly than EXCEPT and UNION. That means A UNION B INTERSECT C EXCEPT D is the same as A UNION (B INTERSECT C) EXCEPT D.

This is the order of operations:- Expressions in parentheses.

- The INTERSECT operator.

- EXCEPT (equivalent of Oracle MINUS) and UNION evaluated from left to right based on their position in the expression.

UNION INTERSECT and EXCEPT are all set operations. These clauses are used to combine the results of more than one select statement into a single result set:

The argument ALL or DISTINCT controls which rows are included in the final result set. If the argument ALL is specified all rows are included even if the rows are identical. If the argument DISTINCT is specified only unique rows are included in the combined result set. If neither is specified, the behavior defaults to DISTINCT.

Multiple set operations are processed left to right, unless the order is explicitly specified via parentheses. Additionally, INTERSECT binds more tightly than EXCEPT and UNION. That means A UNION B INTERSECT C EXCEPT D is the same as A UNION (B INTERSECT C) EXCEPT D.

- Expressions in parentheses.

- The INTERSECT operator.

- EXCEPT (equivalent of Oracle MINUS) and UNION evaluated from left to right based on their position in the expression.

UNION clause#

UNION combines all the rows that are in the result set from the first query with those that are in the result set for the second query. The following is an example of one of the simplest possible UNION clauses. It selects the value 13 and combines this result set with a second query that selects the value 42:

The following query demonstrates the difference between UNION and UNION ALL. It selects the value 13 and combines this result set with a second query that selects the values 42 and 13:

UNION combines all the rows that are in the result set from the first query with those that are in the result set for the second query. The following is an example of one of the simplest possible UNION clauses. It selects the value 13 and combines this result set with a second query that selects the value 42:

The following query demonstrates the difference between UNION and UNION ALL. It selects the value 13 and combines this result set with a second query that selects the values 42 and 13:

INTERSECT clause#

INTERSECT returns only the rows that are in the result sets of both the first and the second queries. The following is an example of one of the simplest possible INTERSECT clauses. It selects the values 13 and 42 and combines this result set with a second query that selects the value 13. Since 42 is only in the result set of the first query, it is not included in the final results.:

INTERSECT returns only the rows that are in the result sets of both the first and the second queries. The following is an example of one of the simplest possible INTERSECT clauses. It selects the values 13 and 42 and combines this result set with a second query that selects the value 13. Since 42 is only in the result set of the first query, it is not included in the final results.:

EXCEPT clause#

EXCEPT returns the rows that are in the result set of the first query, but not the second. The following is an example of one of the simplest possible EXCEPT clauses. It selects the values 13 and 42 and combines this result set with a second query that selects the value 13. Since 13 is also in the result set of the second query, it is not included in the final result.:

EXCEPT returns the rows that are in the result set of the first query, but not the second. The following is an example of one of the simplest possible EXCEPT clauses. It selects the values 13 and 42 and combines this result set with a second query that selects the value 13. Since 13 is also in the result set of the second query, it is not included in the final result.:

ORDER BY clause#

The ORDER BY clause is used to sort a result set by one or more output expressions:

Each expression may be composed of output columns, or it may be an ordinal number selecting an output column by position, starting at one. The ORDER BY clause is evaluated after any GROUP BY or HAVING clause, and before any OFFSET, LIMIT or FETCH FIRST clause. The default null ordering is NULLS LAST, regardless of the ordering direction.

Note that, following the SQL specification, an ORDER BY clause only affects the order of rows for queries that immediately contain the clause. Trino follows that specification, and drops redundant usage of the clause to avoid negative performance impacts.

In the following example, the clause only applies to the select statement.

Since tables in SQL are inherently unordered, and the ORDER BY clause in this case does not result in any difference, but negatively impacts performance of running the overall insert statement, Trino skips the sort operation.

Another example where the ORDER BY clause is redundant, and does not affect the outcome of the overall statement, is a nested query:

More background information and details can be found in a blog post about this optimization.

The ORDER BY clause is used to sort a result set by one or more output expressions:

Each expression may be composed of output columns, or it may be an ordinal number selecting an output column by position, starting at one. The ORDER BY clause is evaluated after any GROUP BY or HAVING clause, and before any OFFSET, LIMIT or FETCH FIRST clause. The default null ordering is NULLS LAST, regardless of the ordering direction.

Note that, following the SQL specification, an ORDER BY clause only affects the order of rows for queries that immediately contain the clause. Trino follows that specification, and drops redundant usage of the clause to avoid negative performance impacts.

In the following example, the clause only applies to the select statement.

Since tables in SQL are inherently unordered, and the ORDER BY clause in this case does not result in any difference, but negatively impacts performance of running the overall insert statement, Trino skips the sort operation.

Another example where the ORDER BY clause is redundant, and does not affect the outcome of the overall statement, is a nested query:

More background information and details can be found in a blog post about this optimization.

OFFSET clause#

The OFFSET clause is used to discard a number of leading rows from the result set:

If the ORDER BY clause is present, the OFFSET clause is evaluated over a sorted result set, and the set remains sorted after the leading rows are discarded:

Otherwise, it is arbitrary which rows are discarded. If the count specified in the OFFSET clause equals or exceeds the size of the result set, the final result is empty.

The OFFSET clause is used to discard a number of leading rows from the result set:

If the ORDER BY clause is present, the OFFSET clause is evaluated over a sorted result set, and the set remains sorted after the leading rows are discarded:

Otherwise, it is arbitrary which rows are discarded. If the count specified in the OFFSET clause equals or exceeds the size of the result set, the final result is empty.

LIMIT or FETCH FIRST clause#

The LIMIT or FETCH FIRST clause restricts the number of rows in the result set.

The following example queries a large table, but the LIMIT clause restricts the output to only have five rows (because the query lacks an ORDER BY, exactly which rows are returned is arbitrary):

LIMIT ALL is the same as omitting the LIMIT clause.

The FETCH FIRST clause supports either the FIRST or NEXT keywords and the ROW or ROWS keywords. These keywords are equivalent and the choice of keyword has no effect on query execution.

If the count is not specified in the FETCH FIRST clause, it defaults to 1:

If the OFFSET clause is present, the LIMIT or FETCH FIRST clause is evaluated after the OFFSET clause:

For the FETCH FIRST clause, the argument ONLY or WITH TIES controls which rows are included in the result set.

If the argument ONLY is specified, the result set is limited to the exact number of leading rows determined by the count.

If the argument WITH TIES is specified, it is required that the ORDER BY clause be present. The result set consists of the same set of leading rows and all of the rows in the same peer group as the last of them (‘ties’) as established by the ordering in the ORDER BY clause. The result set is sorted:

The LIMIT or FETCH FIRST clause restricts the number of rows in the result set.

The following example queries a large table, but the LIMIT clause restricts the output to only have five rows (because the query lacks an ORDER BY, exactly which rows are returned is arbitrary):

LIMIT ALL is the same as omitting the LIMIT clause.

The FETCH FIRST clause supports either the FIRST or NEXT keywords and the ROW or ROWS keywords. These keywords are equivalent and the choice of keyword has no effect on query execution.

If the count is not specified in the FETCH FIRST clause, it defaults to 1:

If the OFFSET clause is present, the LIMIT or FETCH FIRST clause is evaluated after the OFFSET clause:

For the FETCH FIRST clause, the argument ONLY or WITH TIES controls which rows are included in the result set.

If the argument ONLY is specified, the result set is limited to the exact number of leading rows determined by the count.

If the argument WITH TIES is specified, it is required that the ORDER BY clause be present. The result set consists of the same set of leading rows and all of the rows in the same peer group as the last of them (‘ties’) as established by the ordering in the ORDER BY clause. The result set is sorted:

TABLESAMPLE#

There are multiple sample methods:

BERNOULLIEach row is selected to be in the table sample with a probability of the sample percentage. When a table is sampled using the Bernoulli method, all physical blocks of the table are scanned and certain rows are skipped (based on a comparison between the sample percentage and a random value calculated at runtime).

The probability of a row being included in the result is independent from any other row. This does not reduce the time required to read the sampled table from disk. It may have an impact on the total query time if the sampled output is processed further.

SYSTEMThis sampling method divides the table into logical segments of data and samples the table at this granularity. This sampling method either selects all the rows from a particular segment of data or skips it (based on a comparison between the sample percentage and a random value calculated at runtime).

The rows selected in a system sampling will be dependent on which connector is used. For example, when used with Hive, it is dependent on how the data is laid out on HDFS. This method does not guarantee independent sampling probabilities.

Note

Neither of the two methods allow deterministic bounds on the number of rows returned.

Examples:

Using sampling with joins:

There are multiple sample methods:

BERNOULLIEach row is selected to be in the table sample with a probability of the sample percentage. When a table is sampled using the Bernoulli method, all physical blocks of the table are scanned and certain rows are skipped (based on a comparison between the sample percentage and a random value calculated at runtime).

The probability of a row being included in the result is independent from any other row. This does not reduce the time required to read the sampled table from disk. It may have an impact on the total query time if the sampled output is processed further.

SYSTEMThis sampling method divides the table into logical segments of data and samples the table at this granularity. This sampling method either selects all the rows from a particular segment of data or skips it (based on a comparison between the sample percentage and a random value calculated at runtime).

The rows selected in a system sampling will be dependent on which connector is used. For example, when used with Hive, it is dependent on how the data is laid out on HDFS. This method does not guarantee independent sampling probabilities.

Note

Neither of the two methods allow deterministic bounds on the number of rows returned.

Examples:

Using sampling with joins:

UNNEST#

UNNEST can be used to expand an ARRAY or MAP into a relation. Arrays are expanded into a single column:

Maps are expanded into two columns (key, value):

UNNEST can be used in combination with an ARRAY of ROW structures for expanding each field of the ROW into a corresponding column:

UNNEST can optionally have a WITH ORDINALITY clause, in which case an additional ordinality column is added to the end:

UNNEST returns zero entries when the array/map is empty:

UNNEST returns zero entries when the array/map is null:

UNNEST is normally used with a JOIN, and can reference columns from relations on the left side of the join:

UNNEST can also be used with multiple arguments, in which case they are expanded into multiple columns, with as many rows as the highest cardinality argument (the other columns are padded with nulls):

LEFT JOIN is preferable in order to avoid losing the the row containing the array/map field in question when referenced columns from relations on the left side of the join can be empty or have NULL values:

Note that in case of using LEFT JOIN the only condition supported by the current implementation is ON TRUE.

UNNEST can be used to expand an ARRAY or MAP into a relation. Arrays are expanded into a single column:

Maps are expanded into two columns (key, value):

UNNEST can be used in combination with an ARRAY of ROW structures for expanding each field of the ROW into a corresponding column:

UNNEST can optionally have a WITH ORDINALITY clause, in which case an additional ordinality column is added to the end:

UNNEST returns zero entries when the array/map is empty:

UNNEST returns zero entries when the array/map is null:

UNNEST is normally used with a JOIN, and can reference columns from relations on the left side of the join:

UNNEST can also be used with multiple arguments, in which case they are expanded into multiple columns, with as many rows as the highest cardinality argument (the other columns are padded with nulls):

LEFT JOIN is preferable in order to avoid losing the the row containing the array/map field in question when referenced columns from relations on the left side of the join can be empty or have NULL values:

Note that in case of using LEFT JOIN the only condition supported by the current implementation is ON TRUE.

Joins#

Joins allow you to combine data from multiple relations.

Joins allow you to combine data from multiple relations.

CROSS JOIN#

A cross join returns the Cartesian product (all combinations) of two relations. Cross joins can either be specified using the explit CROSS JOIN syntax or by specifying multiple relations in the FROM clause.

Both of the following queries are equivalent:

The nation table contains 25 rows and the region table contains 5 rows, so a cross join between the two tables produces 125 rows:

A cross join returns the Cartesian product (all combinations) of two relations. Cross joins can either be specified using the explit CROSS JOIN syntax or by specifying multiple relations in the FROM clause.

Both of the following queries are equivalent:

The nation table contains 25 rows and the region table contains 5 rows, so a cross join between the two tables produces 125 rows:

LATERAL#

Subqueries appearing in the FROM clause can be preceded by the keyword LATERAL. This allows them to reference columns provided by preceding FROM items.

A LATERAL join can appear at the top level in the FROM list, or anywhere within a parenthesized join tree. In the latter case, it can also refer to any items that are on the left-hand side of a JOIN for which it is on the right-hand side.

When a FROM item contains LATERAL cross-references, evaluation proceeds as follows: for each row of the FROM item providing the cross-referenced columns, the LATERAL item is evaluated using that row set’s values of the columns. The resulting rows are joined as usual with the rows they were computed from. This is repeated for set of rows from the column source tables.

LATERAL is primarily useful when the cross-referenced column is necessary for computing the rows to be joined:

Subqueries appearing in the FROM clause can be preceded by the keyword LATERAL. This allows them to reference columns provided by preceding FROM items.

A LATERAL join can appear at the top level in the FROM list, or anywhere within a parenthesized join tree. In the latter case, it can also refer to any items that are on the left-hand side of a JOIN for which it is on the right-hand side.

When a FROM item contains LATERAL cross-references, evaluation proceeds as follows: for each row of the FROM item providing the cross-referenced columns, the LATERAL item is evaluated using that row set’s values of the columns. The resulting rows are joined as usual with the rows they were computed from. This is repeated for set of rows from the column source tables.

LATERAL is primarily useful when the cross-referenced column is necessary for computing the rows to be joined:

A lateral join behaves more like a correlated subquery than like most JOINs. A lateral join behaves as if the server executed a loop similar to the following:

Qualifying column names#

When two relations in a join have columns with the same name, the column references must be qualified using the relation alias (if the relation has an alias), or with the relation name:

The following query will fail with the error Column 'name' is ambiguous:

When two relations in a join have columns with the same name, the column references must be qualified using the relation alias (if the relation has an alias), or with the relation name:

The following query will fail with the error Column 'name' is ambiguous:

Subqueries#

A subquery is an expression which is composed of a query. The subquery is correlated when it refers to columns outside of the subquery. Logically, the subquery will be evaluated for each row in the surrounding query. The referenced columns will thus be constant during any single evaluation of the subquery.

Note

Support for correlated subqueries is limited. Not every standard form is supported.

A subquery is an expression which is composed of a query. The subquery is correlated when it refers to columns outside of the subquery. Logically, the subquery will be evaluated for each row in the surrounding query. The referenced columns will thus be constant during any single evaluation of the subquery.

Note

Support for correlated subqueries is limited. Not every standard form is supported.

EXISTS#

The EXISTS predicate determines if a subquery returns any rows:

The EXISTS predicate determines if a subquery returns any rows:

IN#

The IN predicate determines if any values produced by the subquery are equal to the provided expression. The result of IN follows the standard rules for nulls. The subquery must produce exactly one column:

The IN predicate determines if any values produced by the subquery are equal to the provided expression. The result of IN follows the standard rules for nulls. The subquery must produce exactly one column:

Scalar subquery#

A scalar subquery is a non-correlated subquery that returns zero or one row. It is an error for the subquery to produce more than one row. The returned value is NULL if the subquery produces no rows:

SELECT name

FROM nation

WHERE regionkey = (SELECT max(regionkey) FROM region);

A scalar subquery is a non-correlated subquery that returns zero or one row. It is an error for the subquery to produce more than one row. The returned value is NULL if the subquery produces no rows:

SELECT name

FROM nation

WHERE regionkey = (SELECT max(regionkey) FROM region);SHOW CATALOGS LIKE 't%'

SHOW COLUMNS FROM table [ LIKE pattern ]

SHOW GRANTS ON TABLE orders;

SHOW TABLES FROM tpch.tiny LIKE 'p%'; SHOW SCHEMAS FROM tpch LIKE '__3%'

INSERT

Insert a single row into the cities table:

Insert multiple rows into the cities table:

Insert a single row into the nation table with the specified column list:

INSERT INTO nation (nationkey, name, regionkey, comment)

VALUES (26, 'POLAND', 3, 'no comment');DELETE FROM lineitem WHERE shipmode = 'AIR';

UPDATE customers SET account_manager = 'John Henry', assign_date = now();

TRUNCATE TABLE orders;

MERGE#

Synopsis#

where when_clause is one of

Description#

Conditionally update and/or delete rows of a table and/or insert new rows into a table.

MERGE supports an arbitrary number of WHEN clauses with different MATCHED conditions, executing the DELETE, UPDATE or INSERT operation in the first WHEN clause selected by the MATCHED state and the match condition.

For each source row, the WHEN clauses are processed in order. Only the first first matching WHEN clause is executed and subsequent clauses are ignored. A MERGE_TARGET_ROW_MULTIPLE_MATCHES exception is raised when a single target table row matches more than one source row.

If a source row is not matched by any WHEN clause and there is no WHEN NOT MATCHED clause, the source row is ignored.

In WHEN clauses with UPDATE operations, the column value expressions can depend on any field of the target or the source. In the NOT MATCHED case, the INSERT expressions can depend on any field of the source.

Examples#

Delete all customers mentioned in the source table:

For matching customer rows, increment the purchases, and if there is no match, insert the row from the source table:

MERGE into the target table from the source table, deleting any matching target row for which the source address is Centreville. For all other matching rows, add the source purchases and set the address to the source address, if there is no match in the target table, insert the source table row:

MERGE INTO accounts t USING monthly_accounts_update s

ON (t.customer = s.customer)

WHEN MATCHED AND s.address = 'Centreville'

THEN DELETE

WHEN MATCHED

THEN UPDATE

SET purchases = s.purchases + t.purchases, address = s.address

WHEN NOT MATCHED

THEN INSERT (customer, purchases, address)

VALUES(s.customer, s.purchases, s.address)

Materialized view management#

A materialized view is a pre-computed data set derived from a query specification (the SELECT in the view definition) and stored for later use.Create a simple materialized view cancelled_orders over the orders table that only includes cancelled orders. Note that orderstatus is a numeric value that is potentially meaningless to a consumer, yet the name of the view clarifies the content:

Create or replace a materialized view order_totals_by_date that summarizes orders across all orders from all customers:

Create a materialized view for a catalog using the Iceberg connector, with a comment and partitioning on two fields in the storage:

Set multiple properties:

Show defined materialized view properties for all catalogs:

Show metadata about the materialized views in all catalogs:

Rename materialized view people to users in the current schema:

Rename materialized view people to users, if materialized view people exists in the current catalog and schema:

Set view properties (x = y) in materialized view people:

Set multiple view properties (foo = 123 and foo bar = 456) in materialized view people:

Set view property x to its default value in materialized view people:

ALTER MATERIALIZED VIEW people SET PROPERTIES x = DEFAULT;

MATERIALIZEDSchema and table management

CREATE TABLE#

Synopsis#

CREATE TABLE [ IF NOT EXISTS ]

table_name (

{ column_name data_type [ NOT NULL ]

[ COMMENT comment ]

[ WITH ( property_name = expression [, ...] ) ]

| LIKE existing_table_name

[ { INCLUDING | EXCLUDING } PROPERTIES ]

}

[, ...]

)

[ COMMENT table_comment ]

[ WITH ( property_name = expression [, ...] ) ]

Description#

Create a new, empty table with the specified columns. Use CREATE TABLE AS to create a table with data.

The optional IF NOT EXISTS clause causes the error to be suppressed if the table already exists.

The optional WITH clause can be used to set properties on the newly created table or on single columns. To list all available table properties, run the following query:

To list all available column properties, run the following query:

The LIKE clause can be used to include all the column definitions from an existing table in the new table. Multiple LIKE clauses may be specified, which allows copying the columns from multiple tables.

If INCLUDING PROPERTIES is specified, all of the table properties are copied to the new table. If the WITH clause specifies the same property name as one of the copied properties, the value from the WITH clause will be used. The default behavior is EXCLUDING PROPERTIES. The INCLUDING PROPERTIES option maybe specified for at most one table.

Examples#

Create a new table orders:

Create the table orders if it does not already exist, adding a table comment and a column comment:

Create the table bigger_orders using the columns from orders plus additional columns at the start and end:

Create a new table orders_column_aliased with the results of a query and the given column names:

Create a new table orders_by_date that summarizes orders:

Create the table orders_by_date if it does not already exist:

Create a new empty_nation table with the same schema as nation and no data:

Drop the table orders_by_date: